From f5cbec66817b643f732f11cdc7b3a550963b70dc Mon Sep 17 00:00:00 2001

From: 99Franklin <358622371@qq.com>

Date: Mon, 30 Dec 2024 19:30:31 +0800

Subject: [PATCH] add OCRBench v2

---

OCRBench/README.md | 58 +

example.py => OCRBench/example.py | 0

{images => OCRBench/images}/GPT4V_Gemini.png | Bin

{images => OCRBench/images}/all_data.png | Bin

OCRBench/{ => json_files}/FullTest.json | 0

OCRBench/{ => json_files}/OCRBench.json | 0

{scripts => OCRBench/scripts}/GPT4V.py | 0

{scripts => OCRBench/scripts}/Genimi.py | 0

{scripts => OCRBench/scripts}/LLaVA1_5.py | 0

{scripts => OCRBench/scripts}/MiniMonkey.py | 0

{scripts => OCRBench/scripts}/blip2.py | 0

.../scripts}/blip2_vicuna_instruct.py | 0

{scripts => OCRBench/scripts}/bliva.py | 0

{scripts => OCRBench/scripts}/interlm.py | 0

{scripts => OCRBench/scripts}/interlm2.py | 0

{scripts => OCRBench/scripts}/internvl2_s | 0

{scripts => OCRBench/scripts}/intervl.py | 0

{scripts => OCRBench/scripts}/llavar.py | 0

.../scripts}/mPLUG-DocOwl15.py | 0

{scripts => OCRBench/scripts}/mPLUG-owl.py | 0

{scripts => OCRBench/scripts}/mPLUG-owl2.py | 0

{scripts => OCRBench/scripts}/minigpt4v2.py | 0

{scripts => OCRBench/scripts}/monkey.py | 0

{scripts => OCRBench/scripts}/qwenvl.py | 0

{scripts => OCRBench/scripts}/qwenvl_api.py | 0

OCRBench_v2/README.md | 84 +

OCRBench_v2/eval_scripts/IoUscore_metric.py | 91 +

OCRBench_v2/eval_scripts/TEDS_metric.py | 931 +

.../IoUscore_metric.cpython-310.pyc | Bin 0 -> 2739 bytes

.../__pycache__/TEDS_metric.cpython-310.pyc | Bin 0 -> 27162 bytes

.../page_ocr_metric.cpython-310.pyc | Bin 0 -> 1416 bytes

.../__pycache__/parallel.cpython-310.pyc | Bin 0 -> 2166 bytes

.../spotting_metric.cpython-310.pyc | Bin 0 -> 4248 bytes

.../__pycache__/vqa_metric.cpython-310.pyc | Bin 0 -> 5347 bytes

OCRBench_v2/eval_scripts/eval.py | 381 +

OCRBench_v2/eval_scripts/get_score.py | 125 +

OCRBench_v2/eval_scripts/page_ocr_metric.py | 50 +

OCRBench_v2/eval_scripts/parallel.py | 50 +

.../eval_scripts/spotting_eval/__init__.py | 0

.../__pycache__/__init__.cpython-310.pyc | Bin 0 -> 178 bytes

.../__pycache__/__init__.cpython-39.pyc | Bin 0 -> 162 bytes

.../rrc_evaluation_funcs_1_1.cpython-310.pyc | Bin 0 -> 15564 bytes

.../rrc_evaluation_funcs_1_1.cpython-39.pyc | Bin 0 -> 15753 bytes

.../__pycache__/script.cpython-310.pyc | Bin 0 -> 10895 bytes

.../__pycache__/script.cpython-39.pyc | Bin 0 -> 10798 bytes

OCRBench_v2/eval_scripts/spotting_eval/gt.zip | Bin 0 -> 236 bytes

.../spotting_eval/gt/gt_img_0.txt | 6 +

.../eval_scripts/spotting_eval/readme.txt | 26 +

.../eval_scripts/spotting_eval/results.zip | Bin 0 -> 1596 bytes

.../spotting_eval/rrc_evaluation_funcs_1_1.py | 456 +

.../eval_scripts/spotting_eval/script.py | 451 +

.../script_test_ch4_t4_e1-1577983164.zip | Bin 0 -> 113280 bytes

.../eval_scripts/spotting_eval/submit.zip | Bin 0 -> 158 bytes

.../spotting_eval/submit/res_img_0.txt | 1 +

OCRBench_v2/eval_scripts/spotting_metric.py | 184 +

OCRBench_v2/eval_scripts/vqa_metric.py | 282 +

OCRBench_v2/pred_folder/internvl2_5_26b.json | 149267 +++++++++++++++

OCRBench_v2/requirements.txt | 12 +

README.md | 48 +-

59 files changed, 152469 insertions(+), 34 deletions(-)

create mode 100644 OCRBench/README.md

rename example.py => OCRBench/example.py (100%)

rename {images => OCRBench/images}/GPT4V_Gemini.png (100%)

rename {images => OCRBench/images}/all_data.png (100%)

rename OCRBench/{ => json_files}/FullTest.json (100%)

rename OCRBench/{ => json_files}/OCRBench.json (100%)

rename {scripts => OCRBench/scripts}/GPT4V.py (100%)

rename {scripts => OCRBench/scripts}/Genimi.py (100%)

rename {scripts => OCRBench/scripts}/LLaVA1_5.py (100%)

rename {scripts => OCRBench/scripts}/MiniMonkey.py (100%)

rename {scripts => OCRBench/scripts}/blip2.py (100%)

rename {scripts => OCRBench/scripts}/blip2_vicuna_instruct.py (100%)

rename {scripts => OCRBench/scripts}/bliva.py (100%)

rename {scripts => OCRBench/scripts}/interlm.py (100%)

rename {scripts => OCRBench/scripts}/interlm2.py (100%)

rename {scripts => OCRBench/scripts}/internvl2_s (100%)

rename {scripts => OCRBench/scripts}/intervl.py (100%)

rename {scripts => OCRBench/scripts}/llavar.py (100%)

rename {scripts => OCRBench/scripts}/mPLUG-DocOwl15.py (100%)

rename {scripts => OCRBench/scripts}/mPLUG-owl.py (100%)

rename {scripts => OCRBench/scripts}/mPLUG-owl2.py (100%)

rename {scripts => OCRBench/scripts}/minigpt4v2.py (100%)

rename {scripts => OCRBench/scripts}/monkey.py (100%)

rename {scripts => OCRBench/scripts}/qwenvl.py (100%)

rename {scripts => OCRBench/scripts}/qwenvl_api.py (100%)

create mode 100644 OCRBench_v2/README.md

create mode 100644 OCRBench_v2/eval_scripts/IoUscore_metric.py

create mode 100644 OCRBench_v2/eval_scripts/TEDS_metric.py

create mode 100644 OCRBench_v2/eval_scripts/__pycache__/IoUscore_metric.cpython-310.pyc

create mode 100644 OCRBench_v2/eval_scripts/__pycache__/TEDS_metric.cpython-310.pyc

create mode 100644 OCRBench_v2/eval_scripts/__pycache__/page_ocr_metric.cpython-310.pyc

create mode 100644 OCRBench_v2/eval_scripts/__pycache__/parallel.cpython-310.pyc

create mode 100644 OCRBench_v2/eval_scripts/__pycache__/spotting_metric.cpython-310.pyc

create mode 100644 OCRBench_v2/eval_scripts/__pycache__/vqa_metric.cpython-310.pyc

create mode 100644 OCRBench_v2/eval_scripts/eval.py

create mode 100644 OCRBench_v2/eval_scripts/get_score.py

create mode 100644 OCRBench_v2/eval_scripts/page_ocr_metric.py

create mode 100644 OCRBench_v2/eval_scripts/parallel.py

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/__init__.py

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/__pycache__/__init__.cpython-310.pyc

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/__pycache__/__init__.cpython-39.pyc

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/__pycache__/rrc_evaluation_funcs_1_1.cpython-310.pyc

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/__pycache__/rrc_evaluation_funcs_1_1.cpython-39.pyc

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/__pycache__/script.cpython-310.pyc

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/__pycache__/script.cpython-39.pyc

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/gt.zip

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/gt/gt_img_0.txt

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/readme.txt

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/results.zip

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/rrc_evaluation_funcs_1_1.py

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/script.py

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/script_test_ch4_t4_e1-1577983164.zip

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/submit.zip

create mode 100644 OCRBench_v2/eval_scripts/spotting_eval/submit/res_img_0.txt

create mode 100644 OCRBench_v2/eval_scripts/spotting_metric.py

create mode 100644 OCRBench_v2/eval_scripts/vqa_metric.py

create mode 100644 OCRBench_v2/pred_folder/internvl2_5_26b.json

create mode 100644 OCRBench_v2/requirements.txt

diff --git a/OCRBench/README.md b/OCRBench/README.md

new file mode 100644

index 0000000..440308c

--- /dev/null

+++ b/OCRBench/README.md

@@ -0,0 +1,58 @@

+# OCRBench: On the Hidden Mystery of OCR in Large Multimodal Models

+ +

+> Large models have recently played a dominant role in natural language processing and multimodal vision-language learning. However, their effectiveness in text-related visual tasks remains relatively unexplored. In this paper, we conducted a comprehensive evaluation of Large Multimodal Models, such as GPT4V and Gemini, in various text-related visual tasks including Text Recognition, Scene Text-Centric Visual Question Answering (VQA), Document-Oriented VQA, Key Information Extraction (KIE), and Handwritten Mathematical Expression Recognition (HMER). To facilitate the assessment of Optical Character Recognition (OCR) capabilities in Large Multimodal Models, we propose OCRBench, a comprehensive evaluation benchmark. Our study encompasses 29 datasets, making it the most comprehensive OCR evaluation benchmark available. Furthermore, our study reveals both the strengths and weaknesses of these models, particularly in handling multilingual text, handwritten text, non-semantic text, and mathematical expression recognition. Most importantly, the baseline results showcased in this study could provide a foundational framework for the conception and assessment of innovative strategies targeted at enhancing zero-shot multimodal techniques.

+

+**[Project Page [This Page]](https://github.com/Yuliang-Liu/MultimodalOCR)** | **[Paper](https://arxiv.org/abs/2305.07895)** |**[OCRBench Leaderboard](https://huggingface.co/spaces/echo840/ocrbench-leaderboard)**|**[Opencompass Leaderboard](https://rank.opencompass.org.cn/leaderboard-multimodal)**|

+

+

+# Data

+| Data | Link | Description |

+| --- | --- | --- |

+| Full Test Json | [Full Test](./json_files/FullTest.json) | This file contains the test data used in Table 1 and Table 2 from [Paper](https://arxiv.org/abs/2305.07895). |

+| OCRBench Json | [OCRBench](./json_files/OCRBench.json) | This file contains the test data in OCRBench used in Table3 from [Paper](https://arxiv.org/abs/2305.07895). |

+| All Test Images |[All Images](https://drive.google.com/file/d/1U5AtLoJ7FrJe9yfcbssfeLmlKb7dTosc/view?usp=drive_link) | This file contains all the testing images used in [Paper](https://arxiv.org/abs/2305.07895), including OCRBench Images.|

+| OCRBench Images | [OCRBench Images](https://drive.google.com/file/d/1a3VRJx3V3SdOmPr7499Ky0Ug8AwqGUHO/view?usp=drive_link) | This file only contains the images used in OCRBench. |

+| Test Results | [Test Results](https://drive.google.com/drive/folders/15XlHCuNTavI1Ihqm4G7u3J34BHpkaqyE?usp=drive_link) | This file file contains the result files for the test models. |

+

+

+# OCRBench

+

+OCRBench is a comprehensive evaluation benchmark designed to assess the OCR capabilities of Large Multimodal Models. It comprises five components: Text Recognition, SceneText-Centric VQA, Document-Oriented VQA, Key Information Extraction, and Handwritten Mathematical Expression Recognition. The benchmark includes 1000 question-answer pairs, and all the answers undergo manual verification and correction to ensure a more precise evaluation.

+

+You can find the results of Large Multimodal Models in **[OCRBench Leaderboard](https://huggingface.co/spaces/echo840/ocrbench-leaderboard)**, if you would like to include your model in the OCRBench leaderboard, please follow the evaluation instructions provided below and feel free to contact us via email at zhangli123@hust.edu.cn. We will update the leaderboard in time.

+

+

+

+> Large models have recently played a dominant role in natural language processing and multimodal vision-language learning. However, their effectiveness in text-related visual tasks remains relatively unexplored. In this paper, we conducted a comprehensive evaluation of Large Multimodal Models, such as GPT4V and Gemini, in various text-related visual tasks including Text Recognition, Scene Text-Centric Visual Question Answering (VQA), Document-Oriented VQA, Key Information Extraction (KIE), and Handwritten Mathematical Expression Recognition (HMER). To facilitate the assessment of Optical Character Recognition (OCR) capabilities in Large Multimodal Models, we propose OCRBench, a comprehensive evaluation benchmark. Our study encompasses 29 datasets, making it the most comprehensive OCR evaluation benchmark available. Furthermore, our study reveals both the strengths and weaknesses of these models, particularly in handling multilingual text, handwritten text, non-semantic text, and mathematical expression recognition. Most importantly, the baseline results showcased in this study could provide a foundational framework for the conception and assessment of innovative strategies targeted at enhancing zero-shot multimodal techniques.

+

+**[Project Page [This Page]](https://github.com/Yuliang-Liu/MultimodalOCR)** | **[Paper](https://arxiv.org/abs/2305.07895)** |**[OCRBench Leaderboard](https://huggingface.co/spaces/echo840/ocrbench-leaderboard)**|**[Opencompass Leaderboard](https://rank.opencompass.org.cn/leaderboard-multimodal)**|

+

+

+# Data

+| Data | Link | Description |

+| --- | --- | --- |

+| Full Test Json | [Full Test](./json_files/FullTest.json) | This file contains the test data used in Table 1 and Table 2 from [Paper](https://arxiv.org/abs/2305.07895). |

+| OCRBench Json | [OCRBench](./json_files/OCRBench.json) | This file contains the test data in OCRBench used in Table3 from [Paper](https://arxiv.org/abs/2305.07895). |

+| All Test Images |[All Images](https://drive.google.com/file/d/1U5AtLoJ7FrJe9yfcbssfeLmlKb7dTosc/view?usp=drive_link) | This file contains all the testing images used in [Paper](https://arxiv.org/abs/2305.07895), including OCRBench Images.|

+| OCRBench Images | [OCRBench Images](https://drive.google.com/file/d/1a3VRJx3V3SdOmPr7499Ky0Ug8AwqGUHO/view?usp=drive_link) | This file only contains the images used in OCRBench. |

+| Test Results | [Test Results](https://drive.google.com/drive/folders/15XlHCuNTavI1Ihqm4G7u3J34BHpkaqyE?usp=drive_link) | This file file contains the result files for the test models. |

+

+

+# OCRBench

+

+OCRBench is a comprehensive evaluation benchmark designed to assess the OCR capabilities of Large Multimodal Models. It comprises five components: Text Recognition, SceneText-Centric VQA, Document-Oriented VQA, Key Information Extraction, and Handwritten Mathematical Expression Recognition. The benchmark includes 1000 question-answer pairs, and all the answers undergo manual verification and correction to ensure a more precise evaluation.

+

+You can find the results of Large Multimodal Models in **[OCRBench Leaderboard](https://huggingface.co/spaces/echo840/ocrbench-leaderboard)**, if you would like to include your model in the OCRBench leaderboard, please follow the evaluation instructions provided below and feel free to contact us via email at zhangli123@hust.edu.cn. We will update the leaderboard in time.

+

+ +

+# Evaluation

+The test code for evaluating models in the paper can be found in [scripts](./scripts). Before conducting the evaluation, you need to configure the model weights and environment based on the official code link provided in the scripts. If you want to evaluate other models, please edit the "TODO" things in [example](./example.py).

+

+You can also use [VLMEvalKit](https://github.com/open-compass/VLMEvalKit) and [lmms-eval](https://github.com/EvolvingLMMs-Lab/lmms-eval) for evaluation.

+

+Example evaluation scripts:

+```python

+

+python ./scripts/monkey.py --image_folder ./OCRBench_Images --OCRBench_file ./OCRBench/OCRBench.json --save_name Monkey_OCRBench --num_workers GPU_Nums # Test on OCRBench

+python ./scripts/monkey.py --image_folder ./OCRBench_Images --OCRBench_file ./OCRBench/FullTest.json --save_name Monkey_FullTest --num_workers GPU_Nums # Full Test

+

+```

+

+# Citation

+If you wish to refer to the baseline results published here, please use the following BibTeX entries:

+```BibTeX

+@article{Liu_2024,

+ title={OCRBench: on the hidden mystery of OCR in large multimodal models},

+ volume={67},

+ ISSN={1869-1919},

+ url={http://dx.doi.org/10.1007/s11432-024-4235-6},

+ DOI={10.1007/s11432-024-4235-6},

+ number={12},

+ journal={Science China Information Sciences},

+ publisher={Springer Science and Business Media LLC},

+ author={Liu, Yuliang and Li, Zhang and Huang, Mingxin and Yang, Biao and Yu, Wenwen and Li, Chunyuan and Yin, Xu-Cheng and Liu, Cheng-Lin and Jin, Lianwen and Bai, Xiang},

+ year={2024},

+ month=dec }

+```

+

+

+

diff --git a/example.py b/OCRBench/example.py

similarity index 100%

rename from example.py

rename to OCRBench/example.py

diff --git a/images/GPT4V_Gemini.png b/OCRBench/images/GPT4V_Gemini.png

similarity index 100%

rename from images/GPT4V_Gemini.png

rename to OCRBench/images/GPT4V_Gemini.png

diff --git a/images/all_data.png b/OCRBench/images/all_data.png

similarity index 100%

rename from images/all_data.png

rename to OCRBench/images/all_data.png

diff --git a/OCRBench/FullTest.json b/OCRBench/json_files/FullTest.json

similarity index 100%

rename from OCRBench/FullTest.json

rename to OCRBench/json_files/FullTest.json

diff --git a/OCRBench/OCRBench.json b/OCRBench/json_files/OCRBench.json

similarity index 100%

rename from OCRBench/OCRBench.json

rename to OCRBench/json_files/OCRBench.json

diff --git a/scripts/GPT4V.py b/OCRBench/scripts/GPT4V.py

similarity index 100%

rename from scripts/GPT4V.py

rename to OCRBench/scripts/GPT4V.py

diff --git a/scripts/Genimi.py b/OCRBench/scripts/Genimi.py

similarity index 100%

rename from scripts/Genimi.py

rename to OCRBench/scripts/Genimi.py

diff --git a/scripts/LLaVA1_5.py b/OCRBench/scripts/LLaVA1_5.py

similarity index 100%

rename from scripts/LLaVA1_5.py

rename to OCRBench/scripts/LLaVA1_5.py

diff --git a/scripts/MiniMonkey.py b/OCRBench/scripts/MiniMonkey.py

similarity index 100%

rename from scripts/MiniMonkey.py

rename to OCRBench/scripts/MiniMonkey.py

diff --git a/scripts/blip2.py b/OCRBench/scripts/blip2.py

similarity index 100%

rename from scripts/blip2.py

rename to OCRBench/scripts/blip2.py

diff --git a/scripts/blip2_vicuna_instruct.py b/OCRBench/scripts/blip2_vicuna_instruct.py

similarity index 100%

rename from scripts/blip2_vicuna_instruct.py

rename to OCRBench/scripts/blip2_vicuna_instruct.py

diff --git a/scripts/bliva.py b/OCRBench/scripts/bliva.py

similarity index 100%

rename from scripts/bliva.py

rename to OCRBench/scripts/bliva.py

diff --git a/scripts/interlm.py b/OCRBench/scripts/interlm.py

similarity index 100%

rename from scripts/interlm.py

rename to OCRBench/scripts/interlm.py

diff --git a/scripts/interlm2.py b/OCRBench/scripts/interlm2.py

similarity index 100%

rename from scripts/interlm2.py

rename to OCRBench/scripts/interlm2.py

diff --git a/scripts/internvl2_s b/OCRBench/scripts/internvl2_s

similarity index 100%

rename from scripts/internvl2_s

rename to OCRBench/scripts/internvl2_s

diff --git a/scripts/intervl.py b/OCRBench/scripts/intervl.py

similarity index 100%

rename from scripts/intervl.py

rename to OCRBench/scripts/intervl.py

diff --git a/scripts/llavar.py b/OCRBench/scripts/llavar.py

similarity index 100%

rename from scripts/llavar.py

rename to OCRBench/scripts/llavar.py

diff --git a/scripts/mPLUG-DocOwl15.py b/OCRBench/scripts/mPLUG-DocOwl15.py

similarity index 100%

rename from scripts/mPLUG-DocOwl15.py

rename to OCRBench/scripts/mPLUG-DocOwl15.py

diff --git a/scripts/mPLUG-owl.py b/OCRBench/scripts/mPLUG-owl.py

similarity index 100%

rename from scripts/mPLUG-owl.py

rename to OCRBench/scripts/mPLUG-owl.py

diff --git a/scripts/mPLUG-owl2.py b/OCRBench/scripts/mPLUG-owl2.py

similarity index 100%

rename from scripts/mPLUG-owl2.py

rename to OCRBench/scripts/mPLUG-owl2.py

diff --git a/scripts/minigpt4v2.py b/OCRBench/scripts/minigpt4v2.py

similarity index 100%

rename from scripts/minigpt4v2.py

rename to OCRBench/scripts/minigpt4v2.py

diff --git a/scripts/monkey.py b/OCRBench/scripts/monkey.py

similarity index 100%

rename from scripts/monkey.py

rename to OCRBench/scripts/monkey.py

diff --git a/scripts/qwenvl.py b/OCRBench/scripts/qwenvl.py

similarity index 100%

rename from scripts/qwenvl.py

rename to OCRBench/scripts/qwenvl.py

diff --git a/scripts/qwenvl_api.py b/OCRBench/scripts/qwenvl_api.py

similarity index 100%

rename from scripts/qwenvl_api.py

rename to OCRBench/scripts/qwenvl_api.py

diff --git a/OCRBench_v2/README.md b/OCRBench_v2/README.md

new file mode 100644

index 0000000..f4bd189

--- /dev/null

+++ b/OCRBench_v2/README.md

@@ -0,0 +1,84 @@

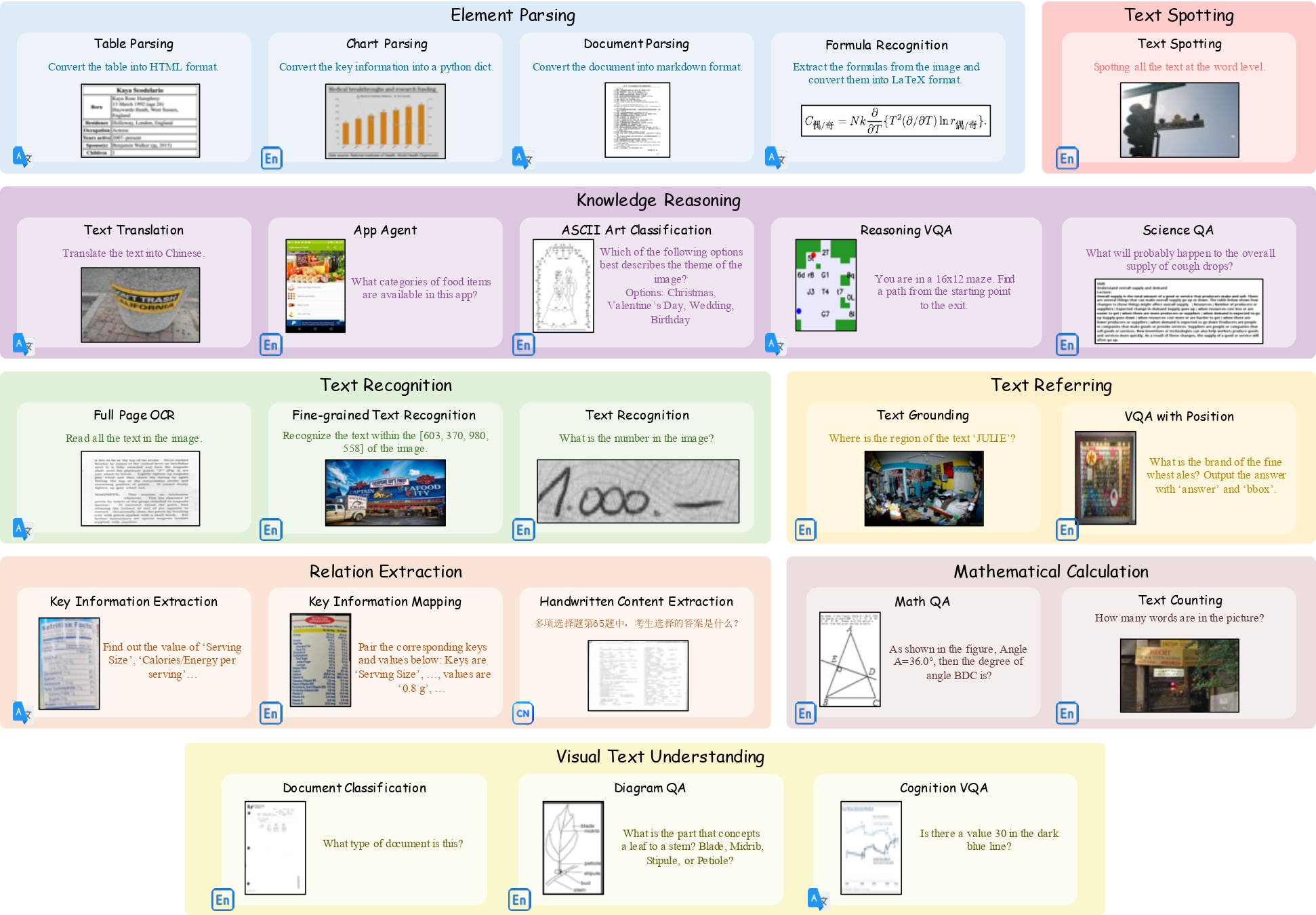

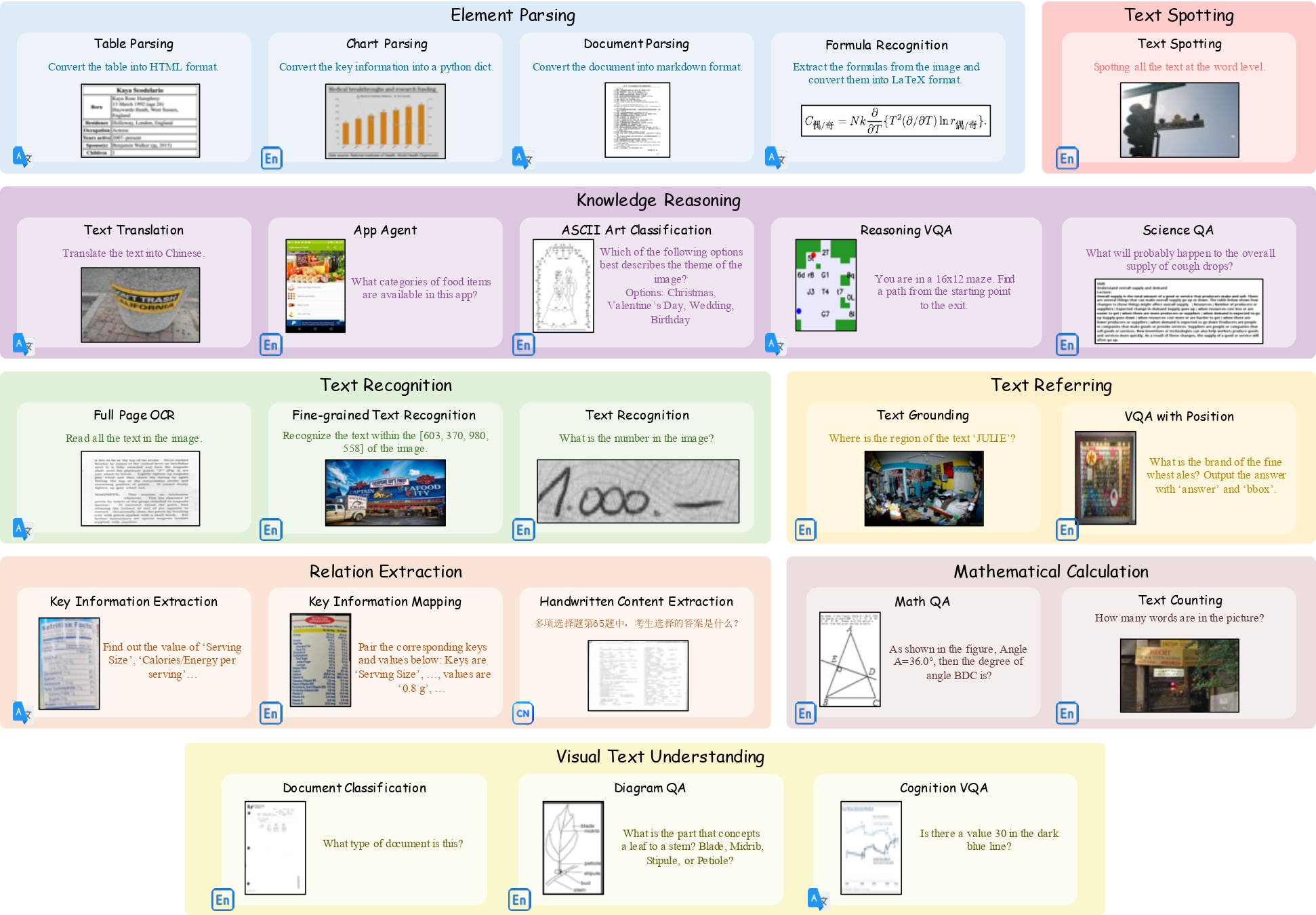

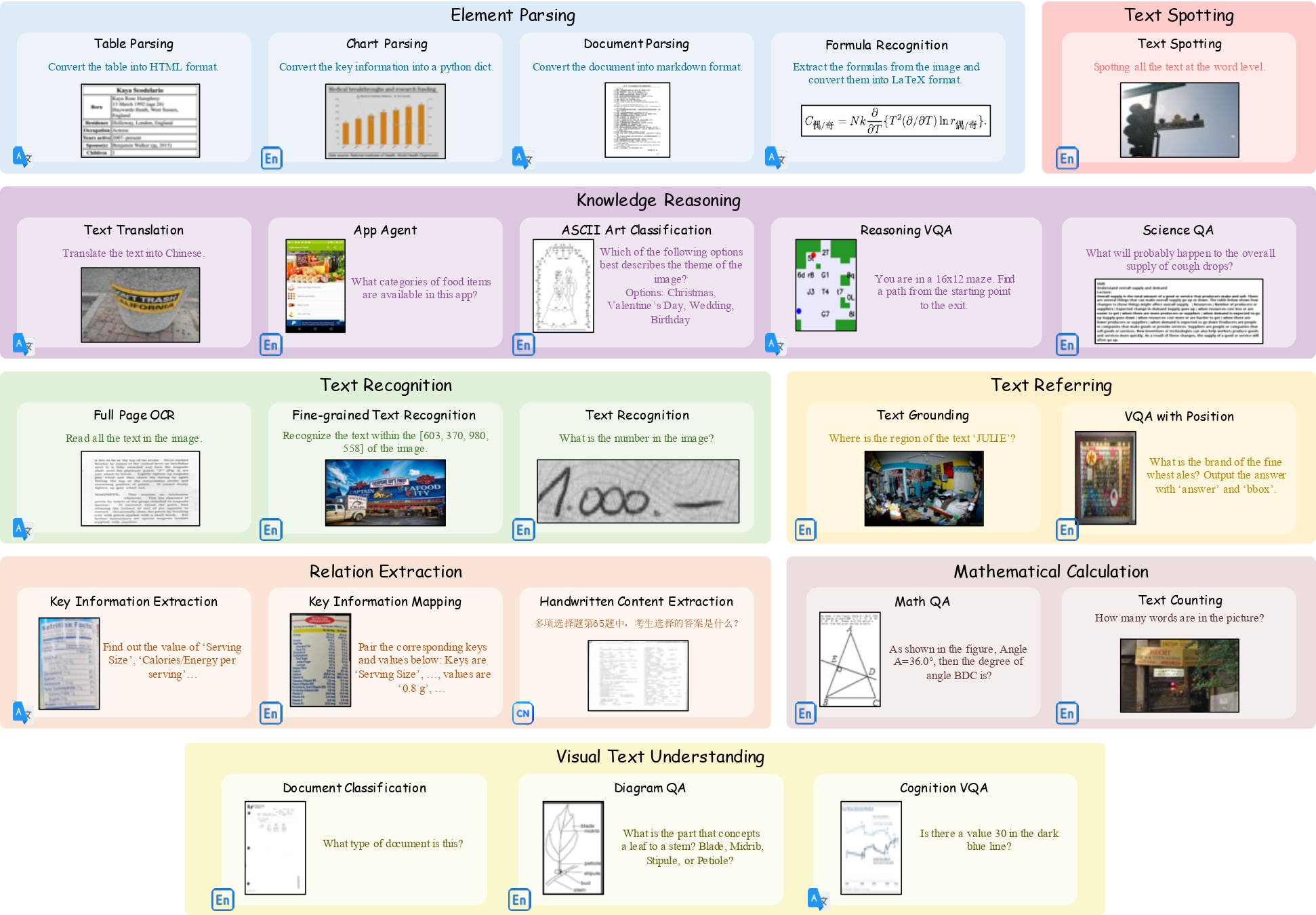

+# OCRBench v2: An Improved Benchmark for Evaluating Large Multimodal Models on Visual Text Localization and Reasoning

+

+> Scoring the Optical Character Recognition (OCR) capabilities of Large Multimodal Models (LMMs) has witnessed growing interest recently. Existing benchmarks have highlighted the impressive performance of LMMs in text recognition; however, their abilities in certain challenging tasks, such as text localization, handwritten content extraction, and logical reasoning, remain underexplored. To bridge this gap, we introduce OCRBench v2, a large-scale bilingual text-centric benchmark with currently the most comprehensive set of tasks (4X more tasks than the previous multi-scene benchmark OCRBench), the widest coverage of scenarios (31 diverse scenarios including street scene, receipt, formula, diagram, and so on), and thorough evaluation metrics, with a total of 10,000 human-verified question-answering pairs and a high proportion of difficult samples. After carefully benchmarking state-of-the-art LMMs on OCRBench v2, we find that 36 out of 38 LMMs score below 50 (100 in total) and suffer from five-type limitations, including less frequently encountered text recognition, fine-grained perception, layout perception, complex element parsing, and logical reasoning.

+

+**[Project Page](https://github.com/Yuliang-Liu/MultimodalOCR)** | **Paper(Coming soon)** | **[OCRBench Leaderboard](https://huggingface.co/spaces/ling99/OCRBench-v2-leaderboard)**

+

+

+

+# Evaluation

+The test code for evaluating models in the paper can be found in [scripts](./scripts). Before conducting the evaluation, you need to configure the model weights and environment based on the official code link provided in the scripts. If you want to evaluate other models, please edit the "TODO" things in [example](./example.py).

+

+You can also use [VLMEvalKit](https://github.com/open-compass/VLMEvalKit) and [lmms-eval](https://github.com/EvolvingLMMs-Lab/lmms-eval) for evaluation.

+

+Example evaluation scripts:

+```python

+

+python ./scripts/monkey.py --image_folder ./OCRBench_Images --OCRBench_file ./OCRBench/OCRBench.json --save_name Monkey_OCRBench --num_workers GPU_Nums # Test on OCRBench

+python ./scripts/monkey.py --image_folder ./OCRBench_Images --OCRBench_file ./OCRBench/FullTest.json --save_name Monkey_FullTest --num_workers GPU_Nums # Full Test

+

+```

+

+# Citation

+If you wish to refer to the baseline results published here, please use the following BibTeX entries:

+```BibTeX

+@article{Liu_2024,

+ title={OCRBench: on the hidden mystery of OCR in large multimodal models},

+ volume={67},

+ ISSN={1869-1919},

+ url={http://dx.doi.org/10.1007/s11432-024-4235-6},

+ DOI={10.1007/s11432-024-4235-6},

+ number={12},

+ journal={Science China Information Sciences},

+ publisher={Springer Science and Business Media LLC},

+ author={Liu, Yuliang and Li, Zhang and Huang, Mingxin and Yang, Biao and Yu, Wenwen and Li, Chunyuan and Yin, Xu-Cheng and Liu, Cheng-Lin and Jin, Lianwen and Bai, Xiang},

+ year={2024},

+ month=dec }

+```

+

+

+

diff --git a/example.py b/OCRBench/example.py

similarity index 100%

rename from example.py

rename to OCRBench/example.py

diff --git a/images/GPT4V_Gemini.png b/OCRBench/images/GPT4V_Gemini.png

similarity index 100%

rename from images/GPT4V_Gemini.png

rename to OCRBench/images/GPT4V_Gemini.png

diff --git a/images/all_data.png b/OCRBench/images/all_data.png

similarity index 100%

rename from images/all_data.png

rename to OCRBench/images/all_data.png

diff --git a/OCRBench/FullTest.json b/OCRBench/json_files/FullTest.json

similarity index 100%

rename from OCRBench/FullTest.json

rename to OCRBench/json_files/FullTest.json

diff --git a/OCRBench/OCRBench.json b/OCRBench/json_files/OCRBench.json

similarity index 100%

rename from OCRBench/OCRBench.json

rename to OCRBench/json_files/OCRBench.json

diff --git a/scripts/GPT4V.py b/OCRBench/scripts/GPT4V.py

similarity index 100%

rename from scripts/GPT4V.py

rename to OCRBench/scripts/GPT4V.py

diff --git a/scripts/Genimi.py b/OCRBench/scripts/Genimi.py

similarity index 100%

rename from scripts/Genimi.py

rename to OCRBench/scripts/Genimi.py

diff --git a/scripts/LLaVA1_5.py b/OCRBench/scripts/LLaVA1_5.py

similarity index 100%

rename from scripts/LLaVA1_5.py

rename to OCRBench/scripts/LLaVA1_5.py

diff --git a/scripts/MiniMonkey.py b/OCRBench/scripts/MiniMonkey.py

similarity index 100%

rename from scripts/MiniMonkey.py

rename to OCRBench/scripts/MiniMonkey.py

diff --git a/scripts/blip2.py b/OCRBench/scripts/blip2.py

similarity index 100%

rename from scripts/blip2.py

rename to OCRBench/scripts/blip2.py

diff --git a/scripts/blip2_vicuna_instruct.py b/OCRBench/scripts/blip2_vicuna_instruct.py

similarity index 100%

rename from scripts/blip2_vicuna_instruct.py

rename to OCRBench/scripts/blip2_vicuna_instruct.py

diff --git a/scripts/bliva.py b/OCRBench/scripts/bliva.py

similarity index 100%

rename from scripts/bliva.py

rename to OCRBench/scripts/bliva.py

diff --git a/scripts/interlm.py b/OCRBench/scripts/interlm.py

similarity index 100%

rename from scripts/interlm.py

rename to OCRBench/scripts/interlm.py

diff --git a/scripts/interlm2.py b/OCRBench/scripts/interlm2.py

similarity index 100%

rename from scripts/interlm2.py

rename to OCRBench/scripts/interlm2.py

diff --git a/scripts/internvl2_s b/OCRBench/scripts/internvl2_s

similarity index 100%

rename from scripts/internvl2_s

rename to OCRBench/scripts/internvl2_s

diff --git a/scripts/intervl.py b/OCRBench/scripts/intervl.py

similarity index 100%

rename from scripts/intervl.py

rename to OCRBench/scripts/intervl.py

diff --git a/scripts/llavar.py b/OCRBench/scripts/llavar.py

similarity index 100%

rename from scripts/llavar.py

rename to OCRBench/scripts/llavar.py

diff --git a/scripts/mPLUG-DocOwl15.py b/OCRBench/scripts/mPLUG-DocOwl15.py

similarity index 100%

rename from scripts/mPLUG-DocOwl15.py

rename to OCRBench/scripts/mPLUG-DocOwl15.py

diff --git a/scripts/mPLUG-owl.py b/OCRBench/scripts/mPLUG-owl.py

similarity index 100%

rename from scripts/mPLUG-owl.py

rename to OCRBench/scripts/mPLUG-owl.py

diff --git a/scripts/mPLUG-owl2.py b/OCRBench/scripts/mPLUG-owl2.py

similarity index 100%

rename from scripts/mPLUG-owl2.py

rename to OCRBench/scripts/mPLUG-owl2.py

diff --git a/scripts/minigpt4v2.py b/OCRBench/scripts/minigpt4v2.py

similarity index 100%

rename from scripts/minigpt4v2.py

rename to OCRBench/scripts/minigpt4v2.py

diff --git a/scripts/monkey.py b/OCRBench/scripts/monkey.py

similarity index 100%

rename from scripts/monkey.py

rename to OCRBench/scripts/monkey.py

diff --git a/scripts/qwenvl.py b/OCRBench/scripts/qwenvl.py

similarity index 100%

rename from scripts/qwenvl.py

rename to OCRBench/scripts/qwenvl.py

diff --git a/scripts/qwenvl_api.py b/OCRBench/scripts/qwenvl_api.py

similarity index 100%

rename from scripts/qwenvl_api.py

rename to OCRBench/scripts/qwenvl_api.py

diff --git a/OCRBench_v2/README.md b/OCRBench_v2/README.md

new file mode 100644

index 0000000..f4bd189

--- /dev/null

+++ b/OCRBench_v2/README.md

@@ -0,0 +1,84 @@

+# OCRBench v2: An Improved Benchmark for Evaluating Large Multimodal Models on Visual Text Localization and Reasoning

+

+> Scoring the Optical Character Recognition (OCR) capabilities of Large Multimodal Models (LMMs) has witnessed growing interest recently. Existing benchmarks have highlighted the impressive performance of LMMs in text recognition; however, their abilities in certain challenging tasks, such as text localization, handwritten content extraction, and logical reasoning, remain underexplored. To bridge this gap, we introduce OCRBench v2, a large-scale bilingual text-centric benchmark with currently the most comprehensive set of tasks (4X more tasks than the previous multi-scene benchmark OCRBench), the widest coverage of scenarios (31 diverse scenarios including street scene, receipt, formula, diagram, and so on), and thorough evaluation metrics, with a total of 10,000 human-verified question-answering pairs and a high proportion of difficult samples. After carefully benchmarking state-of-the-art LMMs on OCRBench v2, we find that 36 out of 38 LMMs score below 50 (100 in total) and suffer from five-type limitations, including less frequently encountered text recognition, fine-grained perception, layout perception, complex element parsing, and logical reasoning.

+

+**[Project Page](https://github.com/Yuliang-Liu/MultimodalOCR)** | **Paper(Coming soon)** | **[OCRBench Leaderboard](https://huggingface.co/spaces/ling99/OCRBench-v2-leaderboard)**

+

+

+  +

+

+

+# Data

+You can download OCRBench v2 from [Google Drive](https://drive.google.com/file/d/1Hk1TMu--7nr5vJ7iaNwMQZ_Iw9W_KI3C/view?usp=sharing)

+After downloading and extracting the dataset, the directory structure is as follows:

+```

+OCRBench_v2/

+├── EN_part/

+├── CN_part/

+├── OCRBench_v2.json

+```

+# Evaluation

+

+## Environment

+All Python dependencies required for the evaluation process are specified in the **requirements.txt**.

+To set up the environment, simply run the following commands in the project directory:

+```python

+conda create -n ocrbench_v2 python==3.10 -y

+conda activate ocrbench_v2

+pip install -r requirements.txt

+```

+

+## Inference

+To evaluate the model's performance on OCRBench v2, please save the model's inference results in the JSON file within the `predict` field.

+

+Example structure of the JSON file:

+

+```json

+{

+ [

+ "dataset_name": "xx",

+ "type": "xx",

+ "id": 0,

+ "image_path": "xx",

+ "question": "xx",

+ "answers": [

+ "xx"

+ ],

+ "predict": "xx"

+ ]

+ ...

+}

+```

+

+## Evaluation Scripts

+After obtaining the inference results of the model, you can see use the bellow scripts to obtain the final score of OCRBench v2. `./pred_folder/internvl2_5_26b.json` is an example inference result of InternVL2.5-26B with [VLMEvalKit](https://github.com/open-compass/VLMEvalKit). You can use `./eval_scripts/eval.py` to get the score for each samples, and the results are saved under `./res_folder`.

+

+```python

+python ./eval_scripts/eval.py --input_path ./pred_folder/internvl2_5_26b.json --output_path ./res_folder/internvl2_5_26b.json

+```

+

+After obtaining the scores for all samples, you can use `./eval_scripts/get_score.py` to get the metrics for OCRBench v2.

+

+```python

+python ./eval_scripts/get_score.py --json_file ./res_folder/internvl2_5_26b.json

+```

+

+# Leaderboard

+

+## Performance of LMMs on English subsets

+

+

+  +

+

+

+## Performance of LMMs on English subsets

+

+

+  +

+

+

+# Copyright Statement

+The data are collected from public datasets and community user contributions. This dataset is for research purposes only and not for commercial use. If there are any copyright concerns, please contact ling_fu@hust.edu.cn.

+

+# Citation

+Coming soon

diff --git a/OCRBench_v2/eval_scripts/IoUscore_metric.py b/OCRBench_v2/eval_scripts/IoUscore_metric.py

new file mode 100644

index 0000000..6af265e

--- /dev/null

+++ b/OCRBench_v2/eval_scripts/IoUscore_metric.py

@@ -0,0 +1,91 @@

+import os

+import re

+import ast

+import ipdb

+from vqa_metric import vqa_evaluation

+

+

+def calculate_iou(box1, box2):

+

+ try:

+ box1 = [int(coordinate) for coordinate in box1]

+ box2 = [int(coordinate) for coordinate in box2]

+ except:

+ return 0

+

+ x1_inter = max(box1[0], box2[0])

+ y1_inter = max(box1[1], box2[1])

+ x2_inter = min(box1[2], box2[2])

+ y2_inter = min(box1[3], box2[3])

+

+ inter_area = max(0, x2_inter - x1_inter) * max(0, y2_inter - y1_inter)

+

+ box1_area = (box1[2] - box1[0]) * (box1[3] - box1[1])

+ box2_area = (box2[2] - box2[0]) * (box2[3] - box2[1])

+

+ union_area = box1_area + box2_area - inter_area

+

+ iou = inter_area / union_area if union_area != 0 else 0

+

+ return iou

+

+

+def vqa_with_position_evaluation(predict, img_metas):

+

+ score_content, score_bbox = .0, .0

+ if "answer" in predict.keys():

+ score_content = vqa_evaluation(predict["answer"], img_metas["answers"])

+ if "bbox" in predict.keys():

+ gt_bbox = img_metas["bbox"]

+ try:

+ predict_bbox_list = ast.literal_eval(predict["bbox"])

+ score_bbox = calculate_iou(predict_bbox_list, gt_bbox)

+ except:

+ score_bbox = 0

+ return 0.5 * score_content + 0.5 * score_bbox

+

+

+def extract_coordinates(text):

+ # Regex pattern to match coordinates in either (x1, y1, x2, y2) or [x1, y1, x2, y2] format

+

+ pattern = r'[\(\[]\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*[\)\]]'

+

+ matches = list(re.finditer(pattern, text))

+ coords_list = []

+ coords_set = set()

+ for match in matches:

+

+ x1, y1, x2, y2 = map(int, match.groups())

+

+ if all(0 <= n <= 1000 for n in [x1, y1, x2, y2]):

+ coords = (x1, y1, x2, y2)

+

+ if coords in coords_set:

+ coords_list = [c for c in coords_list if c != coords]

+

+ coords_list.append(coords)

+ coords_set.add(coords)

+ if coords_list:

+ last_coords = coords_list[-1]

+ return list(last_coords)

+ else:

+ return None

+

+

+if __name__ == "__main__":

+

+ print("Example for Text Grounding task.")

+ box1 = [50, 50, 150, 150]

+ box2 = [60, 60, 140, 140]

+ iou_score = calculate_iou(box1, box2)

+ print(f"IoU score: {iou_score}")

+

+ print("Example for VQA with position task.")

+ pred = {"content": "The content is Hello Buddies", "bbox": box1}

+ gt = {"content": "Hello Buddies", "bbox": box2}

+

+ vqa_score = vqa_evaluation(pred["content"], gt["content"])

+ iou_score = calculate_iou(pred["bbox"], gt["bbox"])

+

+ print(f"VQA score: {vqa_score}")

+ print(f"IoU score: {iou_score}")

diff --git a/OCRBench_v2/eval_scripts/TEDS_metric.py b/OCRBench_v2/eval_scripts/TEDS_metric.py

new file mode 100644

index 0000000..7e7ccf6

--- /dev/null

+++ b/OCRBench_v2/eval_scripts/TEDS_metric.py

@@ -0,0 +1,931 @@

+# Copyright 2020 IBM

+# Author: peter.zhong@au1.ibm.com

+#

+# This is free software; you can redistribute it and/or modify

+# it under the terms of the Apache 2.0 License.

+#

+# This software is distributed in the hope that it will be useful,

+# but WITHOUT ANY WARRANTY; without even the implied warranty of

+# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

+# Apache 2.0 License for more details.

+

+import re

+import ast

+import json

+import ipdb

+import distance

+from apted import APTED, Config

+from itertools import product

+from apted.helpers import Tree

+from lxml import etree, html

+from collections import deque

+from parallel import parallel_process

+from tqdm import tqdm

+from zss import simple_distance, Node

+import string

+from typing import Any, Callable, Optional, Sequence

+import numpy as np

+import Levenshtein

+import editdistance

+

+

+class TableTree(Tree):

+ def __init__(self, tag, colspan=None, rowspan=None, content=None, *children):

+ self.tag = tag

+ self.colspan = colspan

+ self.rowspan = rowspan

+ self.content = content

+ self.children = list(children)

+

+ def bracket(self):

+ """Show tree using brackets notation"""

+ if self.tag == 'td':

+ result = '"tag": %s, "colspan": %d, "rowspan": %d, "text": %s' % \

+ (self.tag, self.colspan, self.rowspan, self.content)

+ else:

+ result = '"tag": %s' % self.tag

+ for child in self.children:

+ result += child.bracket()

+ return "{{{}}}".format(result)

+

+

+class CustomConfig(Config):

+ @staticmethod

+ def maximum(*sequences):

+ """Get maximum possible value

+ """

+ return max(map(len, sequences))

+

+ def normalized_distance(self, *sequences):

+ """Get distance from 0 to 1

+ """

+ return float(distance.levenshtein(*sequences)) / self.maximum(*sequences)

+

+ def rename(self, node1, node2):

+ """Compares attributes of trees"""

+ if (node1.tag != node2.tag) or (node1.colspan != node2.colspan) or (node1.rowspan != node2.rowspan):

+ return 1.

+ if node1.tag == 'td':

+ if node1.content or node2.content:

+ return self.normalized_distance(node1.content, node2.content)

+ return 0.

+

+

+class TEDS(object):

+ ''' Tree Edit Distance basead Similarity

+ '''

+ def __init__(self, structure_only=False, n_jobs=1, ignore_nodes=None):

+ assert isinstance(n_jobs, int) and (n_jobs >= 1), 'n_jobs must be an integer greather than 1'

+ self.structure_only = structure_only

+ self.n_jobs = n_jobs

+ self.ignore_nodes = ignore_nodes

+ self.__tokens__ = []

+

+ def tokenize(self, node):

+ ''' Tokenizes table cells

+ '''

+ self.__tokens__.append('<%s>' % node.tag)

+ if node.text is not None:

+ self.__tokens__ += list(node.text)

+ for n in node.getchildren():

+ self.tokenize(n)

+ if node.tag != 'unk':

+ self.__tokens__.append('' % node.tag)

+ if node.tag != 'td' and node.tail is not None:

+ self.__tokens__ += list(node.tail)

+

+ def load_html_tree(self, node, parent=None):

+ ''' Converts HTML tree to the format required by apted

+ '''

+ global __tokens__

+ if node.tag == 'td':

+ if self.structure_only:

+ cell = []

+ else:

+ self.__tokens__ = []

+ self.tokenize(node)

+ cell = self.__tokens__[1:-1].copy()

+ new_node = TableTree(node.tag,

+ int(node.attrib.get('colspan', '1')),

+ int(node.attrib.get('rowspan', '1')),

+ cell, *deque())

+ else:

+ new_node = TableTree(node.tag, None, None, None, *deque())

+ if parent is not None:

+ parent.children.append(new_node)

+ if node.tag != 'td':

+ for n in node.getchildren():

+ self.load_html_tree(n, new_node)

+ if parent is None:

+ return new_node

+

+ def evaluate(self, pred, true):

+ ''' Computes TEDS score between the prediction and the ground truth of a

+ given sample

+ '''

+ if (not pred) or (not true):

+ return 0.0

+ parser = html.HTMLParser(remove_comments=True, encoding='utf-8')

+ pred = html.fromstring(pred, parser=parser)

+ true = html.fromstring(true, parser=parser)

+ #print("pred:",pred)

+ #print("true:",true)

+ if pred.xpath('body/table') and true.xpath('body/table'):

+ pred = pred.xpath('body/table')[0]

+ true = true.xpath('body/table')[0]

+ if self.ignore_nodes:

+ etree.strip_tags(pred, *self.ignore_nodes)

+ etree.strip_tags(true, *self.ignore_nodes)

+ n_nodes_pred = len(pred.xpath(".//*"))

+ n_nodes_true = len(true.xpath(".//*"))

+ n_nodes = max(n_nodes_pred, n_nodes_true)

+ tree_pred = self.load_html_tree(pred)

+ tree_true = self.load_html_tree(true)

+ distance = APTED(tree_pred, tree_true, CustomConfig()).compute_edit_distance()

+ return 1.0 - (float(distance) / n_nodes)

+ else:

+ return 0.0

+

+ def batch_evaluate(self, pred_json, true_json):

+ ''' Computes TEDS score between the prediction and the ground truth of

+ a batch of samples

+ @params pred_json: {'FILENAME': 'HTML CODE', ...}

+ @params true_json: {'FILENAME': {'html': 'HTML CODE'}, ...}

+ @output: {'FILENAME': 'TEDS SCORE', ...}

+ '''

+ samples = true_json.keys()

+ if self.n_jobs == 1:

+ scores = [self.evaluate(pred_json.get(filename, ''), true_json[filename]['html']) for filename in tqdm(samples)]

+ else:

+ #inputs = [{'pred': pred_json.get(filename, ''), 'true': true_json[filename]['html']} for filename in samples]

+ inputs = [{'pred': pred_json.get(filename, ''), 'true': true_json[filename]} for filename in samples]

+ scores = parallel_process(inputs, self.evaluate, use_kwargs=True, n_jobs=self.n_jobs, front_num=1)

+ scores = dict(zip(samples, scores))

+ return scores

+

+

+def convert_table_to_html_str(table_row_list=[]):

+ """

+ Given a list of table rows, build the corresponding html string, which is used to compute the TEDS score.

+ We use the official code of PubTabNet to compute TEDS score, it does not consider '

' label.

+ We also remove unneccessary spaces within a table cell and extra '\n' as they will influence the TEDS score.

+ """

+ html_table_str = "" + '\n'

+ for data_row in table_row_list:

+ html_table_str += ""

+ for cell_str in data_row:

+ html_table_str += f"| {cell_str} | "

+ html_table_str += " "

+ html_table_str += '\n'

+ html_table_str += " "

+ html_table_str = html_table_str.replace('\n','')

+ return html_table_str

+

+

+def convert_markdown_table_to_html(markdown_table):

+ """

+ Converts a markdown table to the corresponding html string for TEDS computation.

+ """

+ # remove extra code block tokens like '```markdown' and '```

+ markdown_table = markdown_table.strip('```markdown').strip('```').strip()

+ row_str_list = markdown_table.split('\n')

+ # extra the first header row and other data rows

+ valid_row_str_list = [row_str_list[0]]+row_str_list[2:]

+ table_rows = []

+ for row_str in valid_row_str_list:

+ one_row = []

+ for cell in row_str.strip().split('|')[1:-1]:

+ if set(cell) != set(' '):

+ one_row.append(cell.strip())

+ else:

+ one_row.append(' ')

+ table_rows.append(one_row)

+ # build html string based on table rows

+ html_str = convert_table_to_html_str(table_rows)

+ return html_str

+

+

+def dict_to_html(data):

+ html = "\n"

+ for key, value in data.items():

+ if not isinstance(value, str):

+ value = str(value)

+ value_str = ' '.join(value)

+

+ html += f" | {key} | {value_str} | \n"

+ html += " "

+ return html

+

+

+def convert_str_to_dict(predict_str: str):

+ """

+ Parses the 'predict' string and returns a dictionary.

+ Missing or unparseable content is handled gracefully.

+

+ Parameters:

+ - predict_str (str): The prediction string containing the output dict.

+

+ Returns:

+ - dict: A dictionary extracted from the predict string.

+ """

+ # Remove code fences like ```python\n...\n```

+ code_fence_pattern = r'```(?:python|json)?\n(.*?)\n```'

+ match = re.search(code_fence_pattern, predict_str, re.DOTALL | re.IGNORECASE)

+ if match:

+ content = match.group(1)

+ else:

+ content = predict_str.strip()

+

+ data = {}

+ success = False

+

+ # try parsing with JSON

+ try:

+ data = json.loads(content)

+ success = True

+ except json.JSONDecodeError:

+ pass

+

+ # try parsing with ast.literal_eval

+ if not success:

+ try:

+ data = ast.literal_eval(content)

+ if isinstance(data, dict):

+ success = True

+ except (ValueError, SyntaxError):

+ pass

+

+ # try parsing with regex

+ if not success:

+ key_value_pattern = r'["\']?([\w\s]+)["\']?\s*[:=]\s*["\']?([^\n,"\'{}]+)["\']?'

+ matches = re.findall(key_value_pattern, content)

+ try:

+ for key, value in matches:

+ data[key.strip()] = value.strip()

+ except:

+ return {}

+

+ if not data:

+ return {}

+

+ try:

+ result = {k.strip(): str(v).strip() for k, v in data.items()}

+ except:

+ return {}

+ return result

+

+

+def convert_str_to_multi_dict(predict_str: str):

+ """

+ Parses the 'predict' string and returns a dictionary.

+ Handles nested dictionaries and missing or unparseable content gracefully.

+

+ Parameters:

+ - predict_str (str): The prediction string containing the output dict.

+

+ Returns:

+ - dict: A dictionary extracted from the predict string.

+ """

+ # Remove code fences like ```python\n...\n```

+ code_fence_pattern = r'```(?:python|json)?\n(.*?)\n```'

+ matches = re.findall(code_fence_pattern, predict_str, re.DOTALL | re.IGNORECASE)

+ if matches:

+ content = max(matches, key=len)

+ else:

+ content = predict_str.strip()

+

+ def strip_variable_assignment(s):

+ variable_assignment_pattern = r'^\s*\w+\s*=\s*'

+ return re.sub(variable_assignment_pattern, '', s.strip(), count=1)

+

+ content = strip_variable_assignment(content)

+

+ def remove_comments(s):

+ return re.sub(r'#.*', '', s)

+

+ content = remove_comments(content)

+

+ last_brace_pos = content.rfind('}')

+ if last_brace_pos != -1:

+ content = content[:last_brace_pos+1]

+

+ data = {}

+ success = False

+

+ # try parsing with ast.literal_eval

+ try:

+ data = ast.literal_eval(content)

+ if isinstance(data, dict):

+ success = True

+ except (ValueError, SyntaxError, TypeError):

+ pass

+

+ if not success:

+ return {}

+

+ def process_data(obj):

+ if isinstance(obj, dict):

+ return {k: process_data(v) for k, v in obj.items()}

+ elif isinstance(obj, list):

+ return [process_data(elem) for elem in obj]

+ else:

+ return obj

+

+ data = process_data(data)

+

+ return data

+

+

+def generate_combinations(input_dict):

+ """

+ Function to generate all possible combinations of values from a dictionary.

+ """

+ kie_answer = input_dict

+ if not isinstance(kie_answer, dict):

+ kie_answer = kie_answer.strip('"')

+ try:

+ kie_answer = json.loads(kie_answer)

+ except json.JSONDecodeError:

+ try:

+ kie_answer = ast.literal_eval(kie_answer)

+ if not isinstance(kie_answer, dict):

+ kie_answer = ast.literal_eval(kie_answer)

+ except (ValueError, SyntaxError):

+ print(f"Unable to parse 'answers' field: {kie_answer}")

+ return {}

+

+ # Ensure the parsed result is a dictionary.

+ if not isinstance(kie_answer, dict):

+ print("Parsed 'answers' is still not a dictionary.")

+ raise ValueError("Input could not be parsed into a dictionary.")

+

+ keys = list(kie_answer.keys())

+

+ value_lists = []

+ for single_key in keys:

+ sinlge_value = kie_answer[single_key]

+ if not isinstance(sinlge_value, list):

+ sinlge_value = [sinlge_value]

+ value_lists.append(sinlge_value)

+

+ # Compute the Cartesian product of the value lists.

+ combinations = list(product(*value_lists))

+

+ # Create a dictionary for each combination of values.

+ result = [dict(zip(keys, values)) for values in combinations]

+

+ return result

+

+ else:

+ keys = list(input_dict.keys())

+ value_lists = [input_dict[key] for key in keys]

+

+ # Compute the Cartesian product of the value lists.

+ combinations = list(product(*value_lists))

+

+ # Create a dictionary for each combination of values.

+ result = [dict(zip(keys, values)) for values in combinations]

+

+ return result

+

+

+def compute_f1_score(preds, gts, ignores=[]):

+ """Compute the F1-score for KIE task between predicted and ground truth dictionaries.

+

+ Args:

+ preds (dict): The predicted key-value pairs.

+ gts (dict): The ground truth key-value pairs.

+ ignores (list): The list of keys to ignore during evaluation.

+

+ Returns:

+ dict: A dictionary where keys are field names and values are their corresponding F1-scores.

+ """

+ # Optionally remove ignored keys from predictions and ground truths

+ keys = set(preds.keys()).union(set(gts.keys())) - set(ignores)

+ f1_scores = {}

+

+ for key in keys:

+ pred_value = preds.get(key, None)

+ gt_value = gts.get(key, None)

+

+ if pred_value:

+ pred_value = pred_value.lower().strip().replace("\n"," ").replace(" ", "")

+ if gt_value:

+ gt_value = gt_value.lower().strip().replace("\n"," ").replace(" ", "")

+

+ if pred_value is None and gt_value is None:

+ continue

+ elif pred_value is None:

+ precision = 0.0

+ recall = 0.0

+ elif gt_value is None:

+ # false positive

+ precision = 0.0

+ recall = 0.0

+ else:

+ if pred_value == gt_value:

+ # True positive

+ precision = 1.0

+ recall = 1.0

+ else:

+ precision = 0.0

+ recall = 0.0

+

+ # Compute F1-score

+ f1_score = 2 * precision * recall / (precision + recall) if (precision + recall) > 0 else 0.0

+ f1_scores[key] = f1_score

+

+ if len(f1_scores) == 0:

+ return 0

+ average_f1 = sum(f1_scores.values()) / len(f1_scores)

+

+ return average_f1

+

+

+def pre_clean(text):

+ text = re.sub(r'|||', '', text)

+ text = re.sub(r'\s##(\S)', r'\1', text)

+ text = re.sub(r'\\\s', r'\\', text)

+ text = re.sub(r'\s\*\s\*\s', r'**', text)

+ text = re.sub(r'{\s', r'{', text)

+ text = re.sub(r'\s}', r'}', text)

+ text = re.sub(r'\s}', r'}', text)

+ text = re.sub(r'\\begin\s', r'\\begin', text)

+ text = re.sub(r'\\end\s', r'\\end', text)

+ text = re.sub(r'\\end{table}', r'\\end{table} \n\n', text)

+ text = text.replace('\n', ' ')

+ text = text.replace('*', ' ')

+ text = text.replace('_', ' ')

+ return text

+

+

+def get_tree(input_str):

+ tree = (Node('ROOT').addkid(Node('TITLE')))

+

+ lines = input_str.split("\n")

+ lines = [pre_clean(line) for line in lines]

+ last_title = ''

+ for line in lines:

+ if line.startswith('#'):

+ child = tree.get('ROOT')

+ line = line.replace('#', '')

+ child.addkid(Node(line))

+ last_title = line

+ else:

+ if last_title == '':

+ child = tree.get('TITLE')

+ child.addkid(Node(line))

+ else:

+ child = tree.get(last_title)

+ child.addkid(Node(line))

+ return tree

+

+def STEDS(pred_tree, ref_tree):

+ def my_distance(pred, ref):

+ if len(pred.split()) == 0 or len(ref.split()) == 0:

+ return 1

+ else:

+ return 0

+ total_distance = simple_distance(pred_tree, ref_tree, label_dist=my_distance)

+ num_of_nodes = max(len(list(pred_tree.iter())), len(list(ref_tree.iter())))

+ return 1-total_distance/num_of_nodes

+

+

+def doc_parsing_evaluation(pred, gt):

+ score = 0

+ if not isinstance(pred, str):

+ return 0

+ pred_tree = get_tree(pred)

+ gt_tree = get_tree(gt)

+ score = STEDS(pred_tree, gt_tree)

+

+ return score

+

+

+def wrap_html_table(html_table):

+ """

+ The TEDS computation from PubTabNet code requires that the input html table should have , , and tags.

+ Add them if they are missing.

+ """

+ html_table = html_table.replace('\n','')

+ # add missing tag if missing

+ if "" not in html_table:

+ html_table = html_table + " "

+ elif "" in html_table:

+ html_table = "" + html_table

+ elif "" not in html_table:

+ html_table = ""

+ else:

+ pass

+ # add and tags if missing

+ if '' not in html_table:

+ html_table = '' + html_table + ''

+ if '' not in html_table:

+ html_table = '' + html_table + ''

+ return html_table

+

+

+def get_anls(s1, s2):

+ try:

+ s1 = s1.lower()

+ s2 = s2.lower()

+ except:

+ pass

+ if s1 == s2:

+ return 1.0

+ iou = 1 - editdistance.eval(s1, s2) / max(len(s1), len(s2))

+ anls = iou

+ return anls

+

+

+def ocr_eval(references,predictions):

+ socre_=0.0

+ None_num=0

+ for idx,ref_value in enumerate(references):

+ pred_value = predictions[idx]

+ pred_values, ref_values = [], []

+ if isinstance(pred_value, str):

+ pred_values.append(pred_value)

+ else:

+ pred_values = pred_value

+ if isinstance(ref_value, str):

+ ref_values.append(ref_value)

+ else:

+ ref_values = ref_value

+

+ temp_score = 0.0

+ temp_num = len(ref_values)

+

+ for tmpidx, tmpref in enumerate(ref_values):

+ tmppred = pred_values[tmpidx] if tmpidx < len(pred_values) else pred_values[0]

+ if len(pred_values) == 1 and tmppred != "None" and "None" not in ref_values: # pred 1, and not None

+ temp_score = max(temp_score, get_anls(tmppred, tmpref))

+ temp_num = len(ref_values)

+ else:

+ if tmppred=='None' and tmpref!='None':

+ temp_score += 0.0

+ elif tmpref=='None':

+ temp_num -= 1

+ else:

+ temp_score += get_anls(tmppred, tmpref)

+ if temp_num == 0:

+ ocr_score = 0.0

+ None_num += 1

+ else:

+ ocr_score = temp_score / (temp_num)

+ socre_ += ocr_score

+ if None_num == len(references):

+ return 9999

+ else:

+ return round(socre_ / (len(references)-None_num), 5)

+

+

+def csv_eval(predictions,references,easy, pred_type='json'):

+ predictions = predictions

+ labels = references

+ def is_int(val):

+ try:

+ int(val)

+ return True

+ except ValueError:

+ return False

+

+ def is_float(val):

+ try:

+ float(val)

+ return True

+ except ValueError:

+ return False

+

+ def convert_dict_to_list(data):

+ """

+ Convert a dictionary to a list of tuples, handling both simple and nested dictionaries.

+

+ Args:

+ data (dict): The input dictionary, which might be nested or simple.

+

+ Returns:

+ list: A list of tuples generated from the input dictionary.

+ """

+ # print(data)

+ converted_list = []

+ for key, value in data.items():

+ # Check if the value is a dictionary (indicating a nested structure)

+ if isinstance(value, dict):

+ # Handle nested dictionary

+ for subkey, subvalue in value.items():

+ # converted_list.append((key, subkey, subvalue))

+ converted_list.append((key, subkey, re.sub(r'[^\d.-]', '', str(subvalue))))

+

+ else:

+ # Handle simple key-value pair

+ # converted_list.append((key, "value", value))

+ converted_list.append((key, "value", re.sub(r'[^\d.-]', '', str(value))))

+ return converted_list

+

+

+ def csv2triples(csv, separator='\\t', delimiter='\\n'):

+ lines = csv.strip().split(delimiter)

+ header = lines[0].split(separator)

+ triples = []

+ for line in lines[1:]:

+ if not line:

+ continue

+ values = line.split(separator)

+ entity = values[0]

+ for i in range(1, len(values)):

+ if i >= len(header):

+ break

+ #---------------------------------------------------------

+ temp = [entity.strip(), header[i].strip()]

+ temp = [x if len(x)==0 or x[-1] != ':' else x[:-1] for x in temp]

+ value = values[i].strip()

+ value = re.sub(r'[^\d.-]', '', str(value))

+ # value = value.replace("%","")

+ # value = value.replace("$","")

+ triples.append((temp[0], temp[1], value))

+ #---------------------------------------------------------

+ return triples

+

+ def csv2triples_noheader(csv, separator='\\t', delimiter='\\n'):

+ lines = csv.strip().split(delimiter)

+ maybe_header = [x.strip() for x in lines[0].split(separator)]

+ not_header = False

+ if len(maybe_header) > 2:

+ for c in maybe_header[1:]:

+ try:

+ num = float(c)

+ not_header = True

+ except:

+ continue

+ if not_header:

+ break

+ header = None if not_header else maybe_header

+ data_start = 0 if not_header and separator in lines[0] else 1

+ triples = []

+ for line in lines[data_start:]:

+ if not line:

+ continue

+ values = [x.strip() for x in line.split(separator)]

+ entity = values[0]

+ for i in range(1, len(values)):

+ try:

+ temp = [entity if entity[-1]!=':' else entity[:-1], ""]

+ except:

+ temp = [entity, ""]

+ if header is not None:

+ try:

+ this_header = header[i]

+ temp = [entity, this_header]

+ temp = [x if x[-1] != ':' else x[:-1] for x in temp]

+ except:

+ this_header = entity.strip()

+ value = values[i].strip()

+ value = re.sub(r'[^\d.-]', '', str(value))

+ # value = value.replace("%","")

+ # value = value.replace("$","")

+ triples.append((temp[0], temp[1], value))

+ #---------------------------------------------------------

+ return triples

+

+ def process_triplets(triplets):

+ new_triplets = []

+ for triplet in triplets:

+ new_triplet = []

+ triplet_temp = []

+ if len(triplet) > 2:

+ if is_int(triplet[2]) or is_float(triplet[2]):

+ triplet_temp = (triplet[0].lower(), triplet[1].lower(), float(triplet[2]))

+ else:

+ triplet_temp = (triplet[0].lower(), triplet[1].lower(), triplet[2].lower())

+ else:

+ triplet_temp = (triplet[0].lower(), triplet[1].lower(), "no meaning")

+ new_triplets.append(triplet_temp)

+ return new_triplets

+

+ def intersection_with_tolerance(a, b, tol_word, tol_num):

+ a = set(a)

+ b = set(b)

+ c = set()

+ for elem1 in a:

+ for elem2 in b:

+ if is_float(elem1[-1]) and is_float(elem2[-1]):

+ if ((Levenshtein.distance(''.join(elem1[:-1]),''.join(elem2[:-1])) <= tol_word) and (abs(elem1[-1] - elem2[-1]) / (abs(elem2[-1])+0.000001) <= tol_num))or \

+ ((''.join(elem1[:-1]) in ''.join(elem2[:-1])) and (abs(elem1[-1] - elem2[-1]) / (abs(elem2[-1])+0.000001) <= tol_num)) or \

+ ((''.join(elem2[:-1]) in ''.join(elem1[:-1])) and (abs(elem1[-1] - elem2[-1]) / (abs(elem2[-1])+0.000001) <= tol_num)):

+ c.add(elem1)

+ else:

+ if (Levenshtein.distance(''.join([str(i) for i in elem1]),''.join([str(j) for j in elem2])) <= tol_word):

+ c.add(elem1)

+ return list(c)

+

+ def union_with_tolerance(a, b, tol_word, tol_num):

+ c = set(a) | set(b)

+ d = set(a) & set(b)

+ e = intersection_with_tolerance(a, b, tol_word, tol_num)

+ f = set(e)

+ g = c-(f-d)

+ return list(g)

+

+ def get_eval_list(pred_csv, label_csv, separator='\\t', delimiter='\\n', tol_word=3, tol_num=0.05, pred_type='json'):

+

+ if pred_type == 'json':

+ pred_triple_list=[]

+ for it in pred_csv:

+ pred_triple_temp = convert_dict_to_list(it)

+ pred_triple_pre = process_triplets(pred_triple_temp)

+ pred_triple_list.append(pred_triple_pre)

+ else:

+ pred_triple_list=[]

+ for it in pred_csv:

+ pred_triple_temp = csv2triples(it, separator=separator, delimiter=delimiter)

+ # pred_triple_temp = csv2triples_noheader(it, separator=separator, delimiter=delimiter)

+ pred_triple_pre = process_triplets(pred_triple_temp)

+ pred_triple_list.append(pred_triple_pre)

+

+ label_triple_list=[]

+ for it in label_csv:

+ label_triple_temp = convert_dict_to_list(it)

+ label_triple_pre = process_triplets(label_triple_temp)

+ label_triple_list.append(label_triple_pre)

+

+

+ intersection_list=[]

+ union_list=[]

+ sim_list=[]

+ # for each chart image

+ for pred,label in zip(pred_triple_list, label_triple_list):

+ for idx in range(len(pred)):

+ try:

+ if label[idx][1] == "value" and "value" not in pred[idx][:2]:

+ pred[idx] = (pred[idx][0], "value", pred[idx][2])

+ temp_pred_head = sorted(pred[idx][:2])

+ temp_gt_head = sorted(label[idx][:2])

+ pred[idx] = (temp_pred_head[0], temp_pred_head[1], pred[idx][2])

+ label[idx] = (temp_gt_head[0], temp_gt_head[1], label[idx][2])

+ except:

+ continue

+ intersection = intersection_with_tolerance(pred, label, tol_word = tol_word, tol_num=tol_num)

+ union = union_with_tolerance(pred, label, tol_word = tol_word, tol_num=tol_num)

+ sim = len(intersection)/len(union)

+ intersection_list.append(intersection)

+ union_list.append(union)

+ sim_list.append(sim)

+ return intersection_list, union_list, sim_list

+

+ def get_ap(predictions, labels, sim_threhold, tolerance, separator='\\t', delimiter='\\n', easy=1):

+ if tolerance == 'strict':

+ tol_word=0

+ if easy == 1:

+ tol_num=0

+ else:

+ tol_num=0.1

+

+ elif tolerance == 'slight':

+ tol_word=2

+ if easy == 1:

+ tol_num=0.05

+ else:

+ tol_num=0.3

+

+ elif tolerance == 'high':

+ tol_word= 5

+ if easy == 1:

+ tol_num=0.1

+ else:

+ tol_num=0.5

+ intersection_list, union_list, sim_list = get_eval_list(predictions, labels, separator=separator, delimiter=delimiter, tol_word=tol_word, tol_num=tol_num, pred_type=pred_type)

+ ap = len([num for num in sim_list if num >= sim_threhold])/(len(sim_list)+1e-16)

+ return ap

+

+ map_strict = 0

+ map_slight = 0

+ map_high = 0

+ s="\\t"

+ d="\\n"

+

+ for sim_threhold in np.arange (0.5, 1, 0.05):

+ map_temp_strict = get_ap(predictions, labels, sim_threhold=sim_threhold, tolerance='strict', separator=s, delimiter=d, easy=easy)

+ map_temp_slight = get_ap(predictions, labels, sim_threhold=sim_threhold, tolerance='slight', separator=s, delimiter=d, easy=easy)

+ map_temp_high = get_ap(predictions, labels, sim_threhold=sim_threhold, tolerance='high', separator=s, delimiter=d, easy=easy)

+ map_strict += map_temp_strict/10

+ map_slight += map_temp_slight/10

+ map_high += map_temp_high/10

+

+ em = get_ap(predictions, labels, sim_threhold=1, tolerance='strict', separator=s, delimiter=d, easy=easy)

+ ap_50_strict = get_ap(predictions, labels, sim_threhold=0.5, tolerance='strict', separator=s, delimiter=d, easy=easy)

+ ap_75_strict = get_ap(predictions, labels, sim_threhold=0.75, tolerance='strict', separator=s, delimiter=d, easy=easy)

+ ap_90_strict = get_ap(predictions, labels, sim_threhold=0.90, tolerance='strict', separator=s, delimiter=d, easy=easy)

+ ap_50_slight = get_ap(predictions, labels, sim_threhold=0.5, tolerance='slight', separator=s, delimiter=d, easy=easy)

+ ap_75_slight = get_ap(predictions, labels, sim_threhold=0.75, tolerance='slight', separator=s, delimiter=d, easy=easy)

+ ap_90_slight = get_ap(predictions, labels, sim_threhold=0.90, tolerance='slight', separator=s, delimiter=d, easy=easy)

+ ap_50_high = get_ap(predictions, labels, sim_threhold=0.5, tolerance='high', separator=s, delimiter=d, easy=easy)

+ ap_75_high = get_ap(predictions, labels, sim_threhold=0.75, tolerance='high', separator=s, delimiter=d, easy=easy)

+ ap_90_high = get_ap(predictions, labels, sim_threhold=0.90, tolerance='high', separator=s, delimiter=d, easy=easy)

+

+

+ return em, map_strict, map_slight, map_high, ap_50_strict, ap_75_strict, ap_90_strict, ap_50_slight, ap_75_slight, ap_90_slight, ap_50_high, ap_75_high, ap_90_high

+

+def draw_SCRM_table(em, map_strict, map_slight, map_high, ap_50_strict, ap_75_strict, ap_90_strict, ap_50_slight, ap_75_slight, ap_90_slight, ap_50_high, ap_75_high, ap_90_high,title_ocr_socre,source_ocr_socre,x_title_ocr_socre,y_title_ocr_socre,structure_accuracy):

+

+ result=f'''

+ -----------------------------------------------------------\n

+ | Metrics | Sim_threshold | Tolerance | Value |\n

+ -----------------------------------------------------------\n

+ | | | strict | {'%.4f' % map_strict} | \n

+ | | ----------------------------\n

+ | mPrecison | 0.5:0.05:0.95 | slight | {'%.4f' % map_slight} |\n

+ | | ---------------------------\n

+ | | | high | {'%.4f' % map_high} |\n

+ -----------------------------------------------------------\n

+ | | | strict | {'%.4f' % ap_50_strict} |\n

+ | | ---------------------------\n

+ | Precison | 0.5 | slight | {'%.4f' % ap_50_slight } |\n

+ | | ---------------------------\n

+ | | | high | {'%.4f' % ap_50_high } |\n

+ -----------------------------------------------------------\n

+ | | | strict | {'%.4f' % ap_75_strict} |\n

+ | | ---------------------------\n

+ | Precison | 0.75 | slight | {'%.4f' % ap_75_slight} |\n

+ | | ---------------------------\n

+ | | | high | {'%.4f' % ap_75_high} |\n

+ -----------------------------------------------------------\n

+ | | | strict | {'%.4f' % ap_90_strict} |\n

+ | | ---------------------------\n

+ | Precison | 0.9 | slight | {'%.4f' % ap_90_slight } |\n

+ | | ---------------------------\n

+ | | | high | {'%.4f' % ap_90_high} |\n

+ -----------------------------------------------------------\n

+ |Precison(EM) | {'%.4f' % em} |\n

+ -----------------------------------------------------------\n

+ |Title(EM) | {'%.4f' % title_ocr_socre} |\n

+ -----------------------------------------------------------\n

+ |Source(EM) | {'%.4f' % source_ocr_socre} |\n

+ -----------------------------------------------------------\n

+ |X_title(EM) | {'%.4f' % x_title_ocr_socre} |\n

+ -----------------------------------------------------------\n

+ |Y_title(EM) | {'%.4f' % y_title_ocr_socre} |\n

+ -----------------------------------------------------------\n

+ |structure_acc| {'%.4f' % structure_accuracy} |\n

+ -----------------------------------------------------------\n

+

+

+ '''

+ return result

+

+

+if __name__ == '__main__':

+ import json

+ import pprint

+

+ # markdown structure for Table Parsing task

+ pred_markdown = "| 1 | august 5 , 1972 | detroit lions | l 23 - 31 | 0 - 1 |\n| 2 | august 12 , 1972 | green bay packers | l 13 - 14 | 0 - 2 |\n| 3 | august 19 , 1972 | cincinnati bengals | w 35 - 17 | 1 - 2 |\n| 4 | august 25 , 1972 | atlanta falcons | w 24 - 10 | 2 - 2 |\n| 5 | august 31 , 1972 | washington redskins | l 24 - 27 | 2 - 3 |\n| 6 | september 10 , 1972 | minnesota vikings | w 21 - 19 | 3 - 3 |"

+ true_markdown = "| week | date | opponent | result | record |\n| --- | --- | --- | --- | --- |\n| 1 | august 5 , 1972 | detroit lions | l 23 - 31 | 0 - 1 |\n| 2 | august 12 , 1972 | green bay packers | l 13 - 14 | 0 - 2 |\n| 3 | august 19 , 1972 | cincinnati bengals | w 35 - 17 | 1 - 2 |\n| 4 | august 25 , 1972 | atlanta falcons | w 24 - 10 | 2 - 2 |\n| 5 | august 31 , 1972 | washington redskins | l 24 - 27 | 2 - 3 |\n| 6 | september 10 , 1972 | minnesota vikings | w 21 - 19 | 3 - 3 |"

+ teds = TEDS(n_jobs=4)

+ pred_table_html = convert_markdown_table_to_html(pred_markdown)

+ true_table_html = convert_markdown_table_to_html(true_markdown)

+

+ scores = teds.evaluate(pred_table_html, true_table_html)

+

+ pp = pprint.PrettyPrinter()

+ pp.pprint(scores)

+

+ # dict structure for Key Information Extraction task

+ pred_dict = {

+ "company": [

+ "OLD TOWN "

+ ],

+ "date": [

+ "2024"

+ ],

+ "address": [

+ "SRI RAMPAI"

+ ],

+ "total": [

+ "30"

+ ]

+ }

+ true_dict = {

+ "company": [

+ "OLD TOWN KOPITAM SND BHD"

+ ],

+ "date": [

+ "2024/9/27"

+ ],

+ "address": [

+ "SRI RAMPAI"

+ ],

+ "total": [

+ "30"

+ ]

+ }

+ teds = TEDS(n_jobs=4)

+ pred_dict_html = dict_to_html(pred_dict)

+ true_dict_html = dict_to_html(true_dict)

+ print(pred_dict_html)

+ print(true_dict_html)

+

+ scores = teds.evaluate(pred_dict_html, true_dict_html)

+

+ pp = pprint.PrettyPrinter()

+ pp.pprint(scores)

diff --git a/OCRBench_v2/eval_scripts/__pycache__/IoUscore_metric.cpython-310.pyc b/OCRBench_v2/eval_scripts/__pycache__/IoUscore_metric.cpython-310.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..f757f09de7f464dfd55fc975dd4aa457420272d7

GIT binary patch

literal 2739

zcma)7&2J>d6|bu9>FMeD@W&eP!bTc`LUt0g21^kMScU*m;1Hw4gebkXTFq2>+;LC$

zY<16KkGdt0S7MQJ$t9O0k~g=_f&V1`K%bCuNPI)$#v$bQs>fp+ktp4&SFc`Gy;t=<

zewB8+9>e$9-_y}M;Qm2_tDlX*>uA~MAd*QQGr#V+&&^o)!t|DJp%<||Zukw(q$TYK

z%y(o%I_R6SDP8ogYRT4-(24S-cgi^%G1>kr_uJ?@=sU_$ZPhsyeitjcs{1GQ7eVVV

z>QOJ3R9i0pS@=DmQfPsO4DXTzS`M1Ovh>?j*`*AbYr5^->G4~@r!AkN8?n6@w;#SY6I;Jl?)Gq

z`#xT4$A#IVQk2n(EE*gbR<#Ab3vQb(n

zE2lu(gIa6sDJ0*Zxib*|gmLrfC(u<+m}DO+T{Y`IgOlMa6OvKA?(^3Mjmkb!Cz+=3

zR92YfRVR)R0i+h?m$~l1shSh5WHikERx}<_j)j@utrKpTB4SDId-afP_{~us7-nU@

z&x``fuy01<7U9l^QGOUq(kvqAxq?E^;#Ap_Ag<^j7#;3%!F%{AM9W-%2UFLwm6DFK

zk`gWZ8;Imoia9?M1rX*DkhK&e2E4IOSqUt8i|xNmj?NoJAm_aOqwvEr;%bN5yX

zOYasIp;74pB=9BxUFs0dUcI|_d++Z4UiO3Ad-8_^^e_A${N265-v0hIKTtTSCNpZc

zK|Ur}S}O5^72Jkz)0H@zayC)=|YG9cJiT(u-?wD8&gr@qac=FBAwYEko

zQO6U#y+vlop7`i_HhW$j=Q4HvGno~

zi-Qe;u8y&l)w2sAdY!b9FV@-Y`~Uk?3-mkx8sEUHY?4$$tI9oy5=li-S((ZSy(XF}

zzH&x7olY{;O&p`#CKHuN45TDn%V7_76Xw{MRLyai4-Zx5x9fwNT9`isSwLzVW<@1{

zhT;V6coY4m-;Pm~wI}DdqoSx$?8diT=|#FSVVP|Kvrr~3e^NY+@^OJ^p76N%$cD`2

zz_K6FCEOqwhfxv)v;NPH!|^0m{ex8Z--GV{&v8FoJsI^;@JDwB_W33me;MTbkHq~L

z1X7B>{s~EcPuwdYvo@ZjzQKf7`m^V*s($~MKkZY+_b)2G);_!S-l6Kx;iMmB{kK&d

zr~NmkQbsDnv(cQ-Y08gT_u906l?@WTUTqTGo

literal 0

HcmV?d00001

diff --git a/OCRBench_v2/eval_scripts/__pycache__/TEDS_metric.cpython-310.pyc b/OCRBench_v2/eval_scripts/__pycache__/TEDS_metric.cpython-310.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..e5a2b3acf69174638bc726e78ffd9becbb003f55

GIT binary patch

literal 27162

zcmeHwdz2hkT3^?ryQ_P8o*Ipwmdo-(8d+~!mZg;?%aY}_vBzFZ-bd}&y{)O5QA_<8

z-Kvp1?doJ<43Y`nWg#0LyPJ$Q;ebg_P7aTALK04L5|TeSgup^_NQDpr1TX=zVL2Nx

zu+H!I-Rjq9WOji~Hs@sIzIEUC*1g~T-nZNw9xi6^_vX(u7k=~;nar;<(f^l4;&J@K

zOJ*kHXMCfU@$}R13`v`wDNoC@TRuW^S0Hud)w3UySyDTx4XT&<>`9vQyG8AFTI}eOTo~J<=unyus?$I

zNRUT*C(@(-7}8_Gy-44;keN69aev}g>f)@2LN<_o#ow

zI~LsMKjI&K)9{W54+M{{7~cEvJcj3q;P~oe!TW*}w@mM3aCG(5t&I10@c8P{;N&d>

z>-Ok^;UB~AIDYTL@5EUR-PR5

zOmOxNV;biHVdpc`kH1YAoS8P0?CBRSJb5cov4zl#ZG={2$JrH@

zW&OT~@p$K%1DBfh;6Sa~SeRcrfMvWK%tZ&DTdGCXdeg7eoJA5FxR;iW4IaOVZ!UNd2^W{1=r#iQP5sO+bR~yx+T)qcAheSI$L;o8w

zS`*z>+25%)XP`>wk88};I)h*M0)jYm8L)E2R1-c{=rYq-lgo@-^Y&DxZ8WkzR_$_z

zaXjs_nU|fmiB&aMv$rxwk$ZKkZ?EQJ;}&5q*ShEYrRH^)u;ea{%cLvbotr>Y7r7nIU

zq1ee3jZ$7Q@6Kn1^X#0J!14{>d_4n*w&y`FZS(la$UZg;BGv(ZE2j

zgc2J+IX5GSM48Bl00%4P3is7)-TPD!x%JAc)%sH1U2KM76$j7-q%H+TH~pKLwh{|<

z7%V1M4QIqi3SoNW!@ig1N+oK+K8+WSJ_D8K(-6H9mn?

zC*U%+4Tl<&zK4_UU?+E8HS6v{H)^_v`lf6r+4)+tf`i?u=nd6^Ye6Ht6b03W+Jhmc

zO@SOWjriKP*w7$w)vB$)?;^tYp`N_aW~=26ywN(9=lC|AKgPKcXYdPOL=YP|mgeQm

z6)T)T90%{Rk;YBL%`29gBvNEO-^5Ygg_srIjWP@I%&N^aQ@2bI1`F$wZEZi(tOKtH

zp<9W7SuQR`h&Jct*oMD_QStZ1Q|c}}(i90vcHN`450yG6E8776Ka|Mh@Y;pKvEwm#

z9QDHzg8NJxC#qzO8Me{dFtAFxCJ*;cR8;pexDP>60-=ejbHIm}n!ap};`r(?gWhqH

zn4ISOQSdN+f-0Sii4mP_DXW-2hu^>-7yohm!V?HO=gKGb8I_a7la==!IayH@#n7z-54aZtx6*K{plu6*au-xki7p`$T|(|55Vv9#_&X)+7OEv9VWhw`

zEUBQ}Y}A&MobD|tRTlu(L7AH$68lBXD>y#o^0cjw&7&Aw9b<5uL2sXAi-;7T!%wzo

zYf8<4YKOUkJwnEF-}&R3bWh3mB0&K&IPqv*u_9C`^5d>$r;aDYFue$PaHr(4&Jn*h(?lJWpOcR_;U8Z

zd4U+JP0Sx!2%-)vk~p&D7y~sb)mrB~+T4=FXsliGQ|RCVej&M*3DCAloWl~%#sp%U

z4d>l-@jJZ^peRk9QlR_-I>2&hF5*!vnxTdkGHt6dF5t(-1wxh$0bfCG?pw_*KuWhz

zC(5#A1m59teii4&&)xzkchfnYj;#edDy(EaZ@ifKM5b*whIKofMEn~p!=cDy%by3j

zE3OX3b_xibouw++^pb44)6QaE!*LchOlR#k!TUR-u7i&5Zr_f$4ej@;Yt;!l{v#5g4U;mq`i#-nm7xW$Uw

zk=S$1#bu%Q>L}7)PNU|;!agg9)ky3VQIk4}AQ=JG^2_Ac%Vf8vbNaBIW?7Edoh&P1

zEpZybbvd2t3ASEy{zynG_=S5B6m8)qO@cxWsCmpV4fD@ztN3SIvyOmB35<{flx@G;

z+8`%%a2f-kLjJUSPX7>z*id$ChB;EK2*5&s@a;EE00Y@hu;_jkDMM0bnnM1n<>zi0

z>Q%6vomiUZ=eLkGP4guMpu23VXQJ$7fYySrtr~cYjO3YX^77KZbIVvU+IEzezH}-Z

zIS?1JD`qVR|zkEPY

zlOhRf+4~P1*rWC%N?6U~nG|^jUXc1pk$eJ4aonNj-=P(?!jr+guPJGqn^b5(lr;(DVgjXyEr!tl;I-

zGOxgcplj%;v?pAz0eUP1S=UMwv`{FLqGQ{DZ2p_H80LTqCna43V&r)Mqhh9rvD110

z+PMH+EmEiOGJ>1tYKDmJCipfHvo_tx+%RsK^Po%+YaKAyph#!Iw*swgM41T%2$=WR

zo!FAxY-Gh*v<7O_efYUIvw+bokfascWX{_*k=C?Rc>sI&R{&;RSR#;Bz^j1B5c)Kv

z^~e^B9|r_g>Y>XmDPInojpOcXyUsoR?2|L6pL=rGad($s&NI)SeR9`ccmMwV?Sala

zt+_|*wSH|Eaob=w?e+R;E=7Q*wPVXcJAdZ+7jGL}5c6#^1yL*|`H)~s;`Q=a+qh}0

zW^RJBh@pjSiX6Z=ZAtP2$BgDNoK;w3gw

zU}CkZiydOt9ns6D0GQ+id4@@@+Q6=-Lgk0phb-dA;6i;G1tA4mCtozS<7iLeNK@We

zXHloEa{?MOY61iO&ET@yl+tN0!DQkwJyF%jZ17IU2KD|f^Nq8OvblAXNe}Jgq*C@

zF#Me4fPMAzI%hQlZJKin#5JZwU#vU@gdqp=DIp~lmyje~*hSssc$=Luh=!4S`8Y@w>F^5xo5}+J~qZ3Z$qxKVO}zR%)p4

z2ORl@rHjz7%s^=G>!jDgUKe7N-vlEKT@o5K!m1C6W!H)5(#c&ebd5o+I|dF%6C4`J

zpS#p(1as6~R@AZ!ogSj@^=fpf3S!&GlMCe~e_e5R6?eH6@ava?Wz?+IASTU2(*ech

zy6^PPVR}1XK>~%

z=x9~2Si@AOZEr*>ma!vc?vXdbnxL_0UB(_j=Bz{&7JCl)J?8a+q8y7YSS@n1X{)HI

z8AsIoK|q6*<`k8OhIWw?tiPu1|_p(ILJ|pC>{WlEHcOo

z2_hr;(1?s1hmE~!)@+mK2frVgD`vPsb3$$KMc6_D4iOhXgvc$n5oSLpi0vvkdX3ry

ziREXMkG@q6K?_^V^CqXQAg4{DLppi@MMX7_im*>)t5Hx76*VeY{75DC&$ULP!fH|1jUX*YB`B62Pg@P4)o?U2Z!SN(

zrrn~S#ktI^j>cd@K~M6n@i>o7En!ogb|EfsMi84EK2Kz>dYA+3m;6@+>i7u6qSy|j

zDxR$pXj1-lq*uoX7&x)T4`t%wd8ys2KV7KY-Jw<@g$QbPcclxuE2Tt&z*PVZRRfw$

zD1Jqt0@Bwf^|>mv)}UQD%1aFqe&p-}`f1)F{etStuYrUuz-AH5FV$*jDVPI;f{}{?

z6&{!LKH+)Oy>xeDL#L0s7oZwQXGA#;nH-JXS75V2l)3}WtjgTdzF*W6?sUvqA9qjp

z&E6H7GzSHPR$;T7bewb${eJJ<4dk=?)bYh-Xh$0{8S?28

z>)1zk&hC2o)b5YYUY`wLesEeRXTv=oJ^t9se5A#%%r^ES`dYhNX8>$1^u}{et})z+

zph~WJ7+_$KS>aj735Sfr*mn!RET{*ZWF57YLhzQQUcw_Wt|Z2_wTP*Bf{P&xF;9h>

zMa|Z(4z|Mj^5C&bA3NM_F#{#6sRHneK}F47N^)nPzi|55XOrU7Pt8CmICJ{^lS!5`

z_M*%NC;;n~WU0*vlhJ3+KR_E4{I^2HC*45BLz

zonOX&zp4}Y`Dz0OzM8so0O&nbbB{0-|Y)Gwo6_$&guGzGjQ*c2bbD+H;UCC2t1C5QTp7l#@2dJq8L{}12XDxqZ0M$9+D@0N^=(Iq$2ImdP

zf^ojfR*Y62{>vk!_Y2AaM;nAZ-~?Zq%2gFSDm6j%_eNU(Z(=I7448m$NdO)I9P&%#

zE(N6!lr`YM>;>5ew9dQ*6BZPPRC#1)S|hIjTg_g75aDC^3GXaO4(hG!9MosD`TjL@

z11i6CQO-Feb|dt*UBe0s|6Bo0SZGi?);Wx8&(Rhl@s-hxB|Cmz_Vsr*1h}n>jIhb1=XhzU`4-+@j*nOz6p5;

zI90AL+yoAP6%$>nI5gVAy=ptsxeK>VmnVG#e&OQ?V5I`)#~B-wvxA94qa~Y15>L%9

z$}N=dka8NUQLf8k>^-)2V1N#xkpcK}LTCydLW4sIBGX4;^a8X{5pq;1^;;&#cND*aupM{@>d&DADw$gC$35fx01Dwr6mX01T#?G-jsD4`wH

zg1Y)N>gzI@tcTUpI>Z1F

z2oM9ab>M4Uwdqj}uHVj)KhEHr89cy1^u^>1lB^=(RNuzj8w`3Ay~*SotW>zLyr_vT

zR5hfm>TfXk1cNCC-_3x)?~T+zzRPsj0Lg8JiX>LO$w1&i7JE=kPMM3|8HSgfHoQ#=6gr|C)^t=;PAm>7rv=JsI*x6s&tbFy@St>5!cS=AS@eDrsvVpRgC{5a

z7Vu9d#p<@v%10Kh_>6;I9R|I!E@zjY)U>KKE)2hvc3VH#%R`GPv~Z#VSotS(o2Up>

zVtgj^IUJh~ldT!^>#2Cq?dd$~LXd{#1O__VnIR~M8EwUQ!+_{+-h}@8Ftphsl)oGeMI*6EKaF~zQ^QOlPI!h%`~gr7*G7wl{wf_cl-r@V}+hzp*{

z=@)*>P%k5Q4X(KkcvRnm{MK}5^=L%uE?6J(>*AB!W_U;Bws{0~JNL

zE3|XX#M+$L_hDkUjYhVC>!JP*13A~LjNM}Jce{CAbh^@c#TnqF9)1zYWQEmVXYj2I