
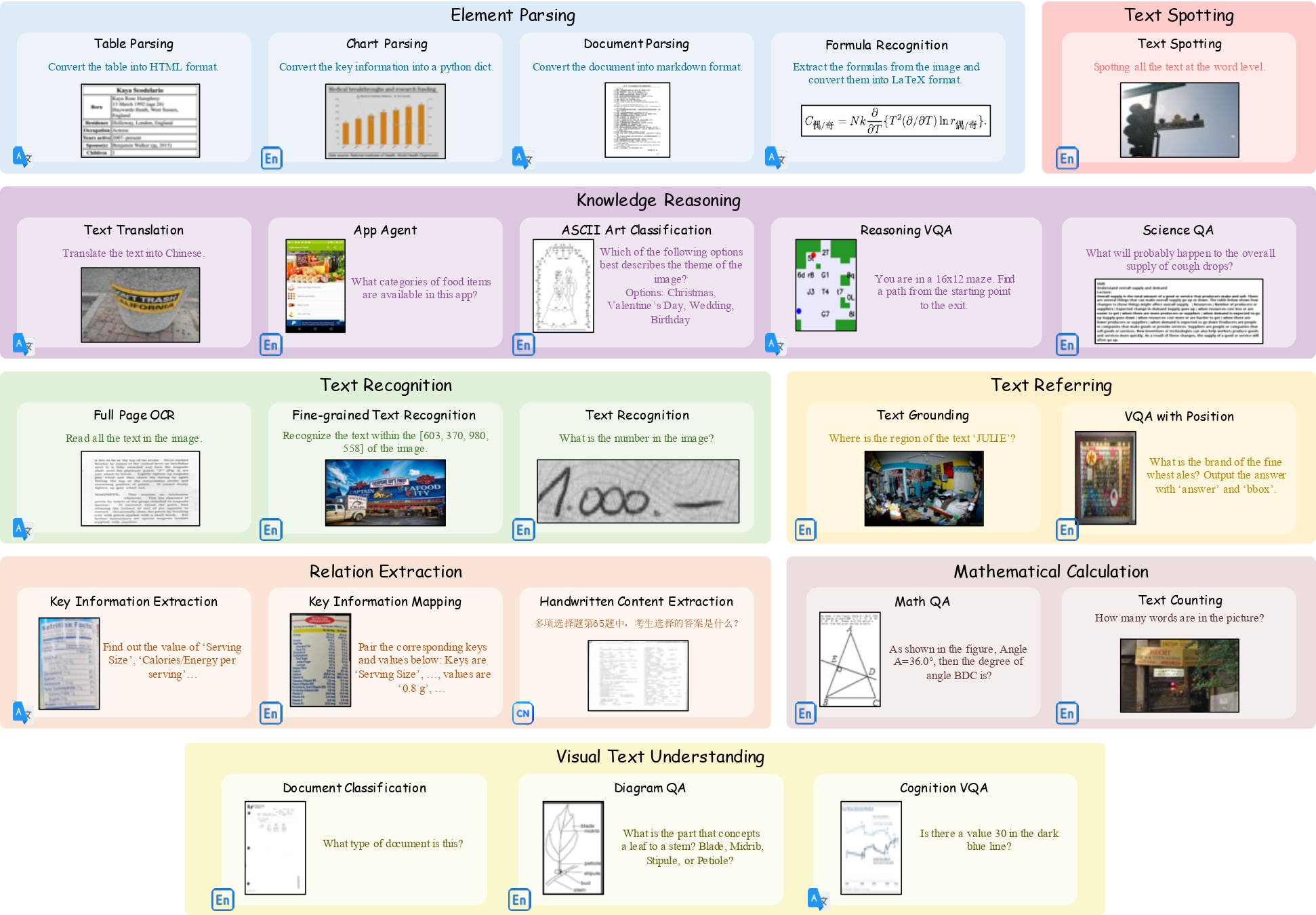

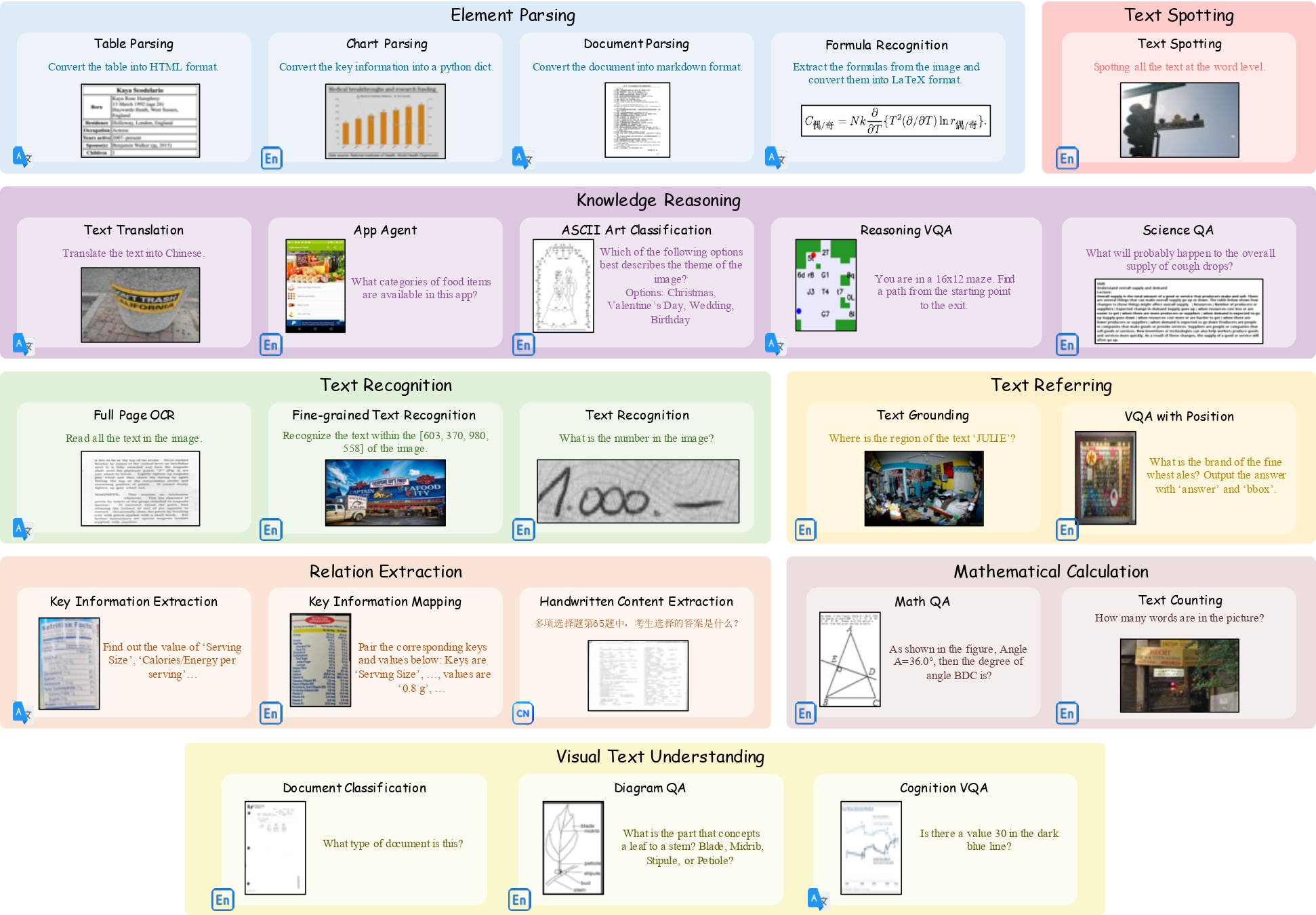

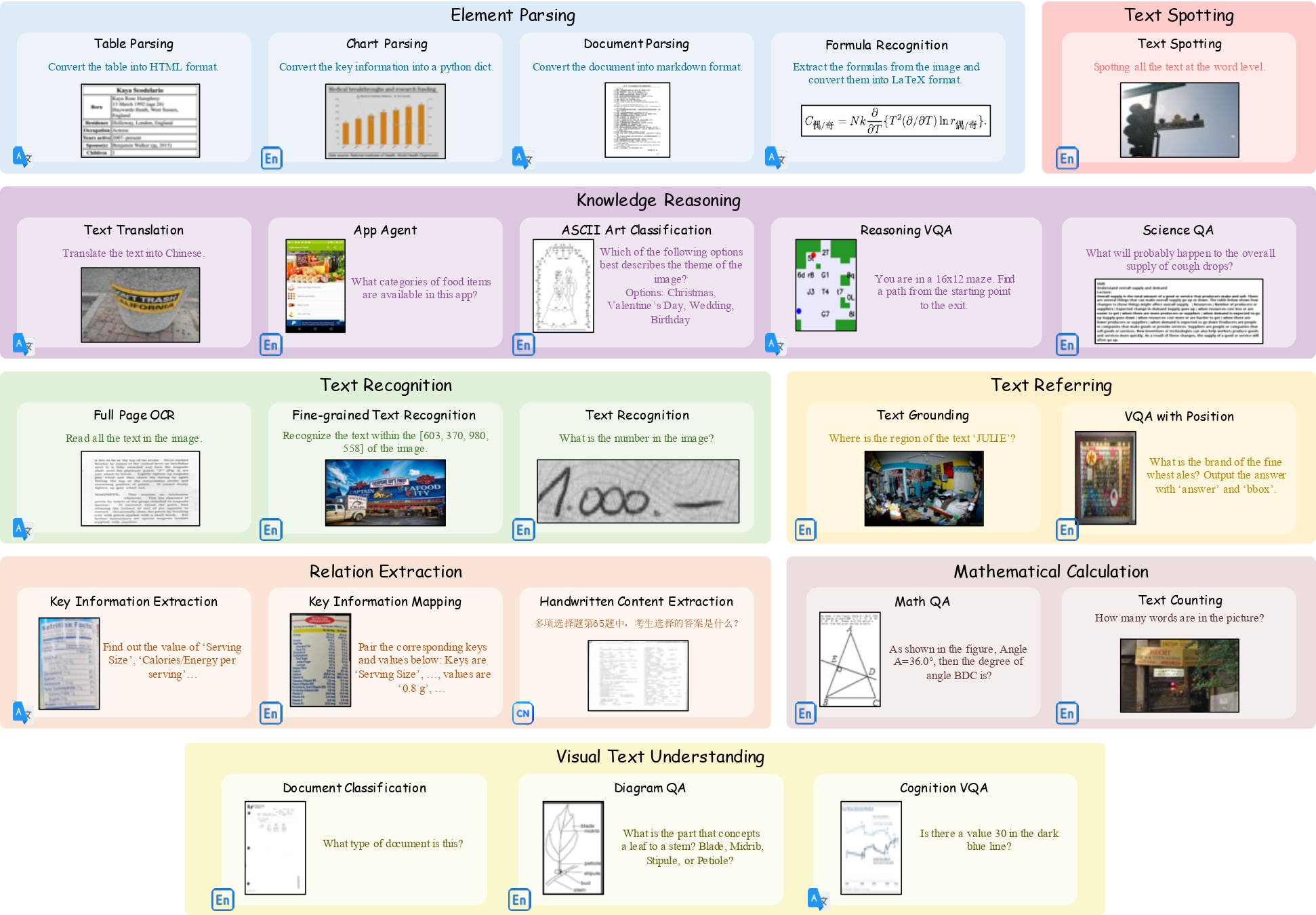

**OCRBench** is a comprehensive evaluation benchmark designed to assess the OCR capabilities of Large Multimodal Models. It comprises five components: Text Recognition, SceneText-Centric VQA, Document-Oriented VQA, Key Information Extraction, and Handwritten Mathematical Expression Recognition. The benchmark contains 1,000 question-answer pairs, and every answer has been manually verified and corrected to make the evaluation more precise. More details can be found in the [OCRBench README](./OCRBench/README.md).
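The paragraph above describes OCRBench as 1,000 question-answer pairs split across five components. The sketch below shows one way results on such a benchmark could be tallied per component. It is a minimal illustration under stated assumptions, not the official evaluation script: the record fields (`type`, `answers`, `prediction`) and the helpers `is_correct` and `score` are hypothetical, and the matching rule (case-insensitive substring match, with whitespace-insensitive matching for handwritten math) is an assumption. Consult the OCRBench README for the actual protocol.

```python
# The five OCRBench components, as named in the README.
COMPONENTS = (
    "Text Recognition",
    "SceneText-Centric VQA",
    "Document-Oriented VQA",
    "Key Information Extraction",
    "Handwritten Mathematical Expression Recognition",
)


def is_correct(prediction: str, answers: list[str], component: str) -> bool:
    """Hypothetical rule (an assumption, not the official one): a prediction
    is correct if any reference answer occurs in it as a substring."""
    if component == "Handwritten Mathematical Expression Recognition":
        # Assumption: LaTeX is compared with all whitespace removed, case kept.
        pred = "".join(prediction.split())
        return any("".join(a.split()) in pred for a in answers)
    # Other components: case-insensitive substring match.
    pred = prediction.strip().lower()
    return any(a.strip().lower() in pred for a in answers)


def score(records: list[dict]) -> dict[str, tuple[int, int]]:
    """Return {component: (num_correct, num_questions)}.

    Each record is assumed to have hypothetical keys 'type', 'answers',
    and 'prediction'; 'type' must be one of COMPONENTS.
    """
    tally = {c: [0, 0] for c in COMPONENTS}
    for r in records:
        c = r["type"]
        tally[c][1] += 1  # count the question
        if is_correct(r["prediction"], r["answers"], c):
            tally[c][0] += 1  # count a hit
    return {c: (hit, total) for c, (hit, total) in tally.items()}


# Usage with three made-up records (not real OCRBench data).
records = [
    {"type": "Text Recognition", "answers": ["STOP"],
     "prediction": "The sign says stop."},
    {"type": "Handwritten Mathematical Expression Recognition",
     "answers": ["x ^ { 2 }"], "prediction": "x^{2}"},
    {"type": "Key Information Extraction", "answers": ["$12.50"],
     "prediction": "12.50"},
]
results = score(records)
total_correct = sum(hit for hit, _ in results.values())
```

Summing the per-component hits gives a single count over the whole question set, which is convenient for ranking models, while the per-component breakdown shows which kind of text a model struggles with.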


# News

* ```2024.12.31``` 🚀 [OCRBench v2](./OCRBench_v2/README.md) is released.
* ```2024.12.11``` 🚀 OCRBench has been accepted by [Science China Information Sciences](https://link.springer.com/article/10.1007/s11432-024-4235-6).


> **OCRBench: On the Hidden Mystery of OCR in Large Multimodal Models**

> Yuliang Liu, Zhang Li, Mingxin Huang, Biao Yang, Wenwen Yu, Chunyuan Li, Xucheng Yin, Cheng-lin Liu, Lianwen Jin, Xiang Bai

[arXiv](https://arxiv.org/abs/2305.07895)

[README](https://github.com/qywh2023/OCRbench/blob/main/OCRBench/README.md)

